Summary of what happened:

- I have two sessions, each with a T1w, a T2w, and a BOLD acquisition:

```
drwxrwxr-x    - mpasternak 29 Jan 23:52 .
drwxrwxr-x    - mpasternak 29 Jan 23:43 ├── V11
.rwxrwxrwx 1.8k mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V11_acq-Philips3T3DT1_T1w.json
.rwxrwxrwx 7.9M mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V11_acq-Philips3T3DT1_T1w.nii.gz
.rwxrwxrwx 1.7k mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V11_acq-Philips3T3DT2_T2w.json
.rwxrwxrwx 7.3M mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V11_acq-Philips3T3DT2_T2w.nii.gz
.rwxrwxrwx 2.7k mpasternak 18 Nov  2024 │  ├── sub-GRN296_ses-V11_task-rest_acq-Philips3T_bold.json
.rwxrwxrwx  35M mpasternak 16 Sep  2024 │  └── sub-GRN296_ses-V11_task-rest_acq-Philips3T_bold.nii.gz
drwxrwxr-x    - mpasternak 30 Jan 10:25 ├── V12
.rwxrwxrwx 1.8k mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V12_acq-Philips3T3DT1_T1w.json
.rwxrwxrwx 8.7M mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V12_acq-Philips3T3DT1_T1w.nii.gz
.rwxrwxrwx 1.7k mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V12_acq-Philips3T3DT2_T2w.json
.rwxrwxrwx 8.4M mpasternak 12 Sep  2024 │  ├── sub-GRN296_ses-V12_acq-Philips3T3DT2_T2w.nii.gz
.rwxrwxrwx 2.7k mpasternak 18 Nov  2024 │  ├── sub-GRN296_ses-V12_task-rest_acq-Philips3T_bold.json
.rwxrwxrwx  43M mpasternak 16 Sep  2024 │  └── sub-GRN296_ses-V12_task-rest_acq-Philips3T_bold.nii.gz
.rw-rw-r--  360 mpasternak 29 Jan 23:52 └── V12_to_V11.txt
```

- Within each session, the images are well aligned from the outset. Between sessions, however, they are very far apart.

- What I'm after: I'd like the later session (V12) to be brought roughly into alignment with the earlier session (V11).

- I have a text ITK transform file (`V12_to_V11.txt`) that rigidly registers the T1w of the later session to the T1w of the earlier one:

```
#Insight Transform File V1.0
#Transform 0
Transform: MatrixOffsetTransformBase_double_3_3
Parameters: 0.9975121222239797 0.06674008382751828 -0.022701489412543956 -0.06310718453215015 0.9889197095907575 0.1343701147858564 0.031417821070966113 -0.13260320383390728 0.9906711422159168 13.38436497025713 22.5374496279273 117.48154029742551
FixedParameters: 0 0 0
```
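For reference, the transform file above can be turned into a 4x4 matrix. This is a minimal sketch, assuming the usual ITK conventions for `MatrixOffsetTransformBase` (the first nine `Parameters` are the 3x3 matrix in row-major order, the last three are the translation, and `FixedParameters` is the centre of rotation) and that ITK physical space is LPS while NIfTI tools such as nilearn use RAS. The helper names `itk_affine_to_matrix` and `lps_to_ras` are hypothetical, not part of ANTs. If the file was produced with V11 as the fixed image and V12 as the moving image, it maps V11 points to V12 points, which is the "pull" direction antsApplyTransforms needs to resample V12 onto a V11 grid.

```python
import numpy as np

# Values copied verbatim from V12_to_V11.txt.
PARAMETERS = [
    0.9975121222239797, 0.06674008382751828, -0.022701489412543956,
    -0.06310718453215015, 0.9889197095907575, 0.1343701147858564,
    0.031417821070966113, -0.13260320383390728, 0.9906711422159168,
    13.38436497025713, 22.5374496279273, 117.48154029742551,
]
FIXED_PARAMETERS = [0.0, 0.0, 0.0]


def itk_affine_to_matrix(params, fixed_params):
    """Hypothetical helper: 4x4 homogeneous matrix in ITK (LPS) physical space.

    Assumes T(x) = M (x - c) + t + c, so the effective offset is t + c - M c.
    """
    params = np.asarray(params, dtype=float)
    center = np.asarray(fixed_params, dtype=float)
    matrix = params[:9].reshape(3, 3)   # row-major 3x3 matrix
    translation = params[9:12]          # translation in mm
    affine = np.eye(4)
    affine[:3, :3] = matrix
    affine[:3, 3] = translation + center - matrix @ center
    return affine


def lps_to_ras(affine_lps):
    """Hypothetical helper: re-express an LPS-space transform in RAS coordinates."""
    flip = np.diag([-1.0, -1.0, 1.0, 1.0])  # flip x and y axes
    return flip @ affine_lps @ flip


itk = itk_affine_to_matrix(PARAMETERS, FIXED_PARAMETERS)
ras = lps_to_ras(itk)
rot = itk[:3, :3]
print("det(R) =", np.linalg.det(rot))                    # ~1 for a rigid transform
print("max |R R^T - I| =", np.abs(rot @ rot.T - np.eye(3)).max())
print("translation (RAS, mm) =", ras[:3, 3])
```

Because `FixedParameters` is `0 0 0` here, the offset is simply the translation, and in RAS terms it amounts to a shift of roughly 117 mm along the superior axis, which is consistent with the sessions being "very far apart".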

- Outcomes:
  - T1w and T2w get transformed as expected. Their FoV, when plotting with nilearn or opening the images in a program like MRIcroGL, accommodates the transformation.
  - This does not happen for the BOLD. It appears to move in world space, but the FoV is not updated, so the image cannot be viewed.
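Whether a viewer can display the data depends on the image grid, i.e. its shape and affine. One quick check is to compare the world-space extent of the resampled BOLD with that of the reference image. The numpy sketch below computes the axis-aligned world bounding box spanned by the corner voxel centres; `world_bounding_box` and `boxes_overlap` are hypothetical helpers, and loading the images with nibabel is an assumption.

```python
import itertools

import numpy as np


def world_bounding_box(affine, shape):
    """Min/max world coordinates (mm) of the corner voxel centres of a grid.

    Only the first three dimensions are used, so 4D BOLD shapes work too.
    """
    nx, ny, nz = shape[:3]
    corners = np.array(
        [[i, j, k, 1.0]
         for i, j, k in itertools.product((0, nx - 1), (0, ny - 1), (0, nz - 1))]
    )
    world = (affine @ corners.T).T[:, :3]  # voxel indices -> world (mm)
    return world.min(axis=0), world.max(axis=0)


def boxes_overlap(box_a, box_b):
    """True if two axis-aligned world-space boxes intersect."""
    (amin, amax), (bmin, bmax) = box_a, box_b
    return bool(np.all(amin <= bmax) and np.all(bmin <= amax))
```

With nibabel, the inputs would come from `img = nib.load(path)` as `img.affine` and `img.shape`; if the output BOLD's box does not overlap the reference's, the data lie outside what a viewer will show.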

Command used (and if a helper script was used, a link to the helper script or the command generated):

Here is a link to an HTML export of a Jupyter notebook that applies the transform with antsApplyTransforms: https://jade-trula-95.tiiny.site

I have followed the guidance to use `-e 3` for BOLD time series given in this GitHub issue: antsApplyTransforms on 4D BOLD images (ANTsX/ANTs issue #1717).

For quick reference, here is the BOLD-specific command:

```bash
antsApplyTransforms \
  -d 3 \
  -e 3 \
  -i /home/mpasternak/Documents/TEST/V12/sub-GRN296_ses-V12_task-rest_acq-Philips3T_bold.nii.gz \
  -r /home/mpasternak/Documents/TEST/V11/sub-GRN296_ses-V11_task-rest_acq-Philips3T_bold.nii.gz \
  -t /home/mpasternak/Documents/TEST/V12_to_V11.txt \
  -o /home/mpasternak/Documents/TEST/V12/sub-GRN296_ses-V12_task-rest_acq-Philips3T_bold_registered.nii.gz \
  -n LanczosWindowedSinc --float
```
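To make the role of `-r` concrete, here is a conceptual numpy/scipy sketch of what resampling a 4D series onto a reference grid involves: the same 3D transform is applied to every time point, and the output always takes the reference grid's shape and affine, so its FoV is that of the `-r` image (here the V11 BOLD). This is not how ANTs is implemented (ANTs uses ITK resamplers, including `LanczosWindowedSinc`, which this sketch does not reproduce). It assumes the transform has already been converted to a RAS 4x4 matrix in the pull direction (reference point to input point); `resample_4d` is a hypothetical helper.

```python
import numpy as np
from scipy.ndimage import affine_transform


def resample_4d(data, input_affine, ref_affine, ref_shape, ras_transform, order=1):
    """Resample each volume of a 4D array onto a reference grid.

    ras_transform maps reference-space points (RAS, mm) to input-space points.
    Returns the resampled data and the reference affine it is defined on.
    """
    # Reference voxel -> reference world -> input world -> input voxel.
    vox_map = np.linalg.inv(input_affine) @ ras_transform @ ref_affine
    matrix, offset = vox_map[:3, :3], vox_map[:3, 3]
    out_shape = tuple(ref_shape[:3])
    out = np.zeros(out_shape + (data.shape[3],), dtype=np.float32)
    for t in range(data.shape[3]):
        # affine_transform samples the input at matrix @ o + offset
        # for every output voxel index o; outside points get cval.
        out[..., t] = affine_transform(
            data[..., t], matrix, offset=offset, output_shape=out_shape,
            order=order, mode="constant", cval=0.0,
        )
    return out, ref_affine
```

Whatever transform is supplied, the returned array lives on the reference grid; if the transformed data fall outside that grid, they are filled with `cval` rather than the grid being enlarged.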

Version:

v2.5.4

Screenshots / relevant information:

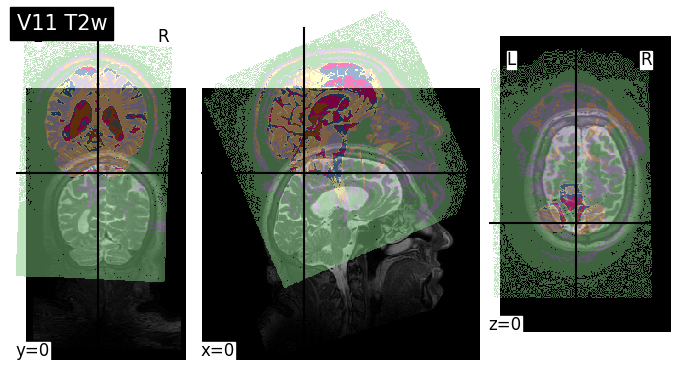

Example of the initial inter-session difference for the T2w (the overlay is the V12 T2w):

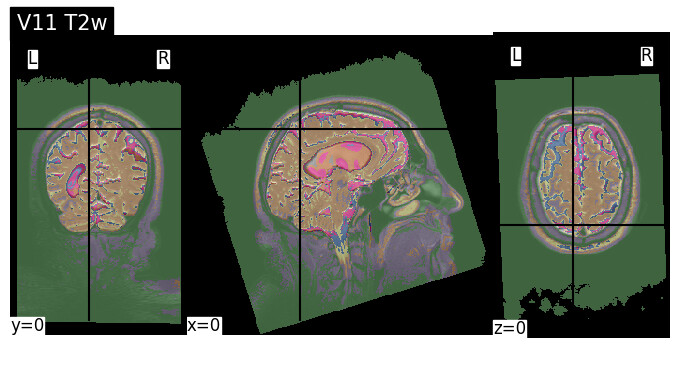

After registration, everything works for T2w (and T1w):

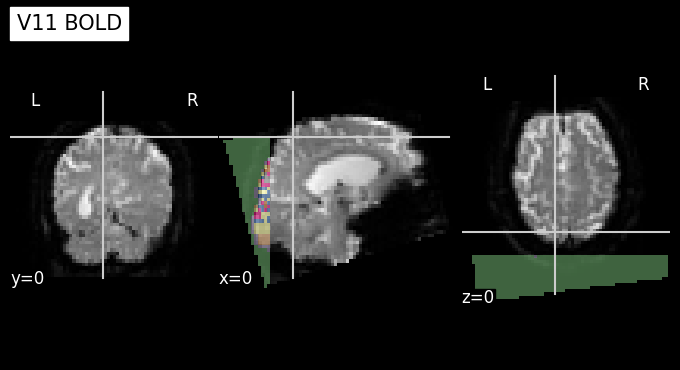

The same cannot be said for BOLD: