Hi neurostars!

I am investigating the possibility of writing a simple tool to check MRI protocol compliance, for continuous integration of new MRI sessions into a multi-site/multi-vendor BIDS dataset.

I looked into mrQA (GitHub: Open-Minds-Lab/mrQA), which provides tools for quality assurance in medical imaging datasets, including protocol compliance, but it doesn’t seem to fit our needs exactly.

Are there existing tools with a similar function that I am not aware of?

I thought about integrating it into the heudiconv heuristic, but I wouldn’t have access to all the tags, and it would make the heuristic more complex.

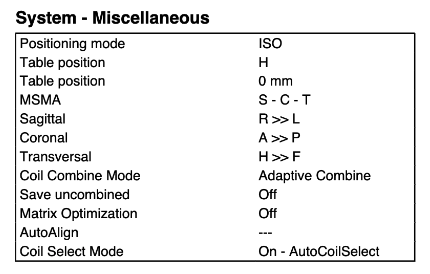

I would like a tool, much like the BIDS validator, that would check which scanner was used for a new session (using Manufacturer/ManufacturersModelName/StationName/SoftwareVersions) and then apply a set of rules to a subset of the fields in the sidecar JSON of each series from the new session.
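A minimal sketch of the scanner lookup, assuming the rule sets have already been loaded into a plain dict keyed by the four identifying fields. The helper names (`scanner_key`, `select_rules`, `apply_rules`) and the example values are hypothetical, and exact equality is used for simplicity.

```python
from typing import Mapping, Optional

# Sidecar fields identifying the scanner (BIDS spells the last one "SoftwareVersions").
SCANNER_KEYS = ("Manufacturer", "ManufacturersModelName", "StationName", "SoftwareVersions")


def scanner_key(sidecar: Mapping) -> tuple:
    """Hashable key for the scanner that produced a series.

    Missing fields become None, so an incomplete sidecar still yields a key;
    it simply won't match any known scanner.
    """
    return tuple(sidecar.get(k) for k in SCANNER_KEYS)


def select_rules(sidecar: Mapping, rules_by_scanner: Mapping) -> Optional[dict]:
    """Rule set for this sidecar's scanner, or None if the scanner is unknown."""
    return rules_by_scanner.get(scanner_key(sidecar))


def apply_rules(sidecar: Mapping, rules: Mapping) -> list:
    """Compare only the fields named in `rules`; return one message per mismatch."""
    mismatches = []
    for field, expected in rules.items():
        actual = sidecar.get(field)
        if actual != expected:
            mismatches.append(f"{field}: expected {expected!r}, got {actual!r}")
    return mismatches


if __name__ == "__main__":
    # Hypothetical values for a single site.
    rules_by_scanner = {
        ("Siemens", "Prisma", "SITE_A", "syngo MR XA30"): {"RepetitionTime": 2.0, "FlipAngle": 90},
    }
    sidecar = {
        "Manufacturer": "Siemens", "ManufacturersModelName": "Prisma",
        "StationName": "SITE_A", "SoftwareVersions": "syngo MR XA30",
        "RepetitionTime": 2.0, "FlipAngle": 80,
    }
    rules = select_rules(sidecar, rules_by_scanner)
    print(apply_rules(sidecar, rules))  # ['FlipAngle: expected 90, got 80']
```

An unknown scanner makes `select_rules` return `None`, which a CI check would most likely treat as an error in its own right.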

It should also check that all required sequences are present, while allowing some sequences to be set as optional.

Research led me to json-schema and pydantic for schema validation. The idea: given a set of sessions from each scanner in the study, one could run a configuration tool that pre-generates a JSON Schema (with conditional sub-schemas dependent on site/model/manufacturer). This tool would select the common JSON tags that should be validated (logically, the sequence-related ones) and create a set of rules, allowing approximate numerical matching where necessary. The resulting schema JSON could then be edited to tweak details before being pushed to “production”. For instance, one might want to manually set the minimum number of TRs for an fMRI run (which would require dcmstack fields).
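One way the config tool could emit conditional sub-schemas is sketched below, assuming one reference sidecar per scanner (containing all four scanner fields) and a hand-picked list of fields to validate. Each scanner becomes an `if`/`then` pair inside `allOf`, numeric values get a relative tolerance window via `minimum`/`maximum`, and a top-level `anyOf` rejects sidecars from scanners that have no reference. The function names and the 1% default tolerance are illustrative assumptions.

```python
import json
from numbers import Real

SCANNER_KEYS = ("Manufacturer", "ManufacturersModelName", "StationName", "SoftwareVersions")


def field_constraint(value, rel_tol):
    """JSON Schema constraint for one reference value.

    Numbers get a [value - tol, value + tol] window (approximate matching);
    everything else (strings, lists, booleans) must match exactly via "const".
    """
    if isinstance(value, Real) and not isinstance(value, bool):
        tol = abs(value) * rel_tol
        return {"type": "number", "minimum": value - tol, "maximum": value + tol}
    return {"const": value}


def scanner_condition(reference):
    """Sub-schema matching sidecars from the same scanner as `reference`."""
    return {
        "properties": {k: {"const": reference[k]} for k in SCANNER_KEYS},
        # Without "required", the "if" would also match sidecars lacking these keys.
        "required": list(SCANNER_KEYS),
    }


def build_series_schema(references, fields, rel_tol=0.01):
    """Build one schema for a series from one reference sidecar per scanner."""
    if not references:
        raise ValueError("need at least one reference sidecar")
    conditionals = []
    for ref in references:
        present = [f for f in fields if f in ref]
        conditionals.append({
            "if": scanner_condition(ref),
            "then": {
                "properties": {f: field_constraint(ref[f], rel_tol) for f in present},
                "required": present,
            },
        })
    return {
        "$schema": "https://json-schema.org/draft/2020-12/schema",
        "type": "object",
        # Reject sidecars coming from a scanner with no reference at all.
        "anyOf": [c["if"] for c in conditionals],
        # Apply the scanner-specific rules.
        "allOf": conditionals,
    }


if __name__ == "__main__":
    # Hypothetical reference sidecar.
    ref = {"Manufacturer": "Siemens", "ManufacturersModelName": "Prisma",
           "StationName": "SITE_A", "SoftwareVersions": "syngo MR XA30",
           "RepetitionTime": 2.0, "EchoTime": 0.03, "PhaseEncodingDirection": "j-"}
    schema = build_series_schema([ref], ["RepetitionTime", "EchoTime", "PhaseEncodingDirection"])
    print(json.dumps(schema, indent=2))
```

The returned dict can be written to a file under the reference tree, hand-edited, and checked with the `jsonschema` package (e.g. `jsonschema.validate(sidecar, schema)`). With several reference sessions per scanner, the window could instead be derived from the observed range of values.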

Additional tags could configure which sequences are required or optional and/or how many of each are required (for instance, if one wants 3 fmap runs, one for each task).
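The required/optional/count rules could be expressed as a small mapping from filename patterns to allowed counts. The sketch below assumes a hypothetical `SESSION_SPEC` of glob patterns over paths relative to the session directory (NIfTI files only), where a minimum of 0 marks a sequence as optional and `None` means no upper bound; files that match no pattern are also reported.

```python
from fnmatch import fnmatchcase

# Hypothetical session spec: glob pattern -> (min_count, max_count).
# min_count == 0 marks a sequence as optional; max_count None means no upper bound.
SESSION_SPEC = {
    "anat/*_T1w.nii.gz": (1, 1),
    "anat/*_T2w.nii.gz": (0, 1),            # optional
    "func/*_task-*_bold.nii.gz": (3, None),
    "fmap/*_epi.nii.gz": (3, 3),            # e.g. one fieldmap per task
}


def check_session_contents(relpaths, spec):
    """Return messages for out-of-range counts and for files outside the protocol."""
    problems = []
    for pattern, (min_count, max_count) in spec.items():
        n = sum(1 for p in relpaths if fnmatchcase(p, pattern))
        if n < min_count:
            problems.append(f"{pattern}: found {n}, need at least {min_count}")
        elif max_count is not None and n > max_count:
            problems.append(f"{pattern}: found {n}, allowed at most {max_count}")
    # Anything not covered by the spec is flagged too.
    for p in relpaths:
        if not any(fnmatchcase(p, pattern) for pattern in spec):
            problems.append(f"{p}: not in the protocol")
    return problems


if __name__ == "__main__":
    files = [
        "anat/sub-01_ses-pre_T1w.nii.gz",
        "func/sub-01_ses-pre_task-a_bold.nii.gz",
        "func/sub-01_ses-pre_task-b_bold.nii.gz",
        "fmap/sub-01_ses-pre_dir-AP_epi.nii.gz",
    ]
    for msg in check_session_contents(files, SESSION_SPEC):
        print(msg)
```

`fnmatchcase` is used rather than `fnmatch` so that matching is case-sensitive on every platform, as BIDS filenames are.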

This would result in an ignored hidden folder within the BIDS structure (e.g. .bids-check/) that follows the regular BIDS structure, with JSON files containing the schema (e.g. .bids-check/sub-ref/ses-{pre,post}/{anat,func}/{**entities}_{suffix}.json). Ideally, the schema could be queried with pybids.
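To find the schema for a new sidecar, the subject label can be swapped for the reference one in both the directory and the filename. This sketch assumes the `.bids-check/sub-ref/...` layout described above and, as a design choice, drops the `run` entity so that all runs of a task share one schema; the function name and defaults are hypothetical. pybids (e.g. `BIDSLayout.parse_file_entities`) could replace the hand-rolled entity parsing.

```python
from pathlib import PurePosixPath


def reference_schema_path(sidecar_relpath, check_dir=".bids-check",
                          ref_label="ref", drop_entities=("run",)):
    """Map a new sidecar (path relative to the dataset root) to its schema file.

    sub-01/ses-pre/func/sub-01_ses-pre_task-rest_run-02_bold.json
      -> .bids-check/sub-ref/ses-pre/func/sub-ref_ses-pre_task-rest_bold.json
    """
    path = PurePosixPath(sidecar_relpath)
    if path.suffix != ".json":
        raise ValueError(f"not a JSON sidecar: {sidecar_relpath}")
    dirs = list(path.parts[:-1])
    if not dirs or not dirs[0].startswith("sub-"):
        raise ValueError(f"not a subject-level path: {sidecar_relpath}")

    # Filename = key-value entities joined by "_", followed by the suffix.
    *entities, suffix = path.stem.split("_")
    kept = []
    for ent in entities:
        key = ent.partition("-")[0]
        if key == "sub":
            kept.append(f"sub-{ref_label}")   # swap in the reference subject
        elif key not in drop_entities:
            kept.append(ent)
    new_name = "_".join(kept + [suffix]) + ".json"

    dirs[0] = f"sub-{ref_label}"
    return PurePosixPath(check_dir, *dirs, new_name)


if __name__ == "__main__":
    print(reference_schema_path("sub-01/ses-pre/func/sub-01_ses-pre_task-rest_run-02_bold.json"))
```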

A new BIDS session could then be validated against the schema, producing a report of mismatches and returning an error code if any mismatch occurs. For each new sidecar, the tool would use the BIDS entities to find the corresponding schema to validate against.
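The report-and-exit-code step might look like the sketch below. To keep it dependency-free, `minimal_checker` is a hypothetical stand-in that understands only per-field `const`, `minimum` and `maximum` rules and ignores other keywords; with full JSON Schema files it would be replaced by something like `jsonschema.Draft202012Validator(schema).iter_errors(sidecar)`.

```python
from typing import Callable, Iterable, Mapping, Tuple


def minimal_checker(sidecar: Mapping, rules: Mapping) -> list:
    """Check per-field rules of the form {"const": v} or {"minimum": a, "maximum": b}."""
    errors = []
    for field, rule in rules.items():
        if field not in sidecar:
            errors.append(f"{field}: missing")
            continue
        value = sidecar[field]
        if "const" in rule and value != rule["const"]:
            errors.append(f"{field}: expected {rule['const']!r}, got {value!r}")
        if "minimum" in rule or "maximum" in rule:
            if not isinstance(value, (int, float)) or isinstance(value, bool):
                errors.append(f"{field}: expected a number, got {value!r}")
            elif not rule.get("minimum", value) <= value <= rule.get("maximum", value):
                errors.append(f"{field}: {value!r} outside "
                              f"[{rule.get('minimum')}, {rule.get('maximum')}]")
    return errors


def validate_session(pairs: Iterable[Tuple[str, Mapping, Mapping]],
                     checker: Callable[[Mapping, Mapping], list] = minimal_checker) -> int:
    """Print a per-sidecar report; return 1 if any sidecar mismatches, else 0."""
    n_bad = 0
    for name, sidecar, rules in pairs:
        errors = checker(sidecar, rules)
        if errors:
            n_bad += 1
            print(f"FAIL {name}")
            for err in errors:
                print(f"  - {err}")
        else:
            print(f"ok   {name}")
    print(f"{n_bad} sidecar(s) with mismatches")
    return 1 if n_bad else 0


if __name__ == "__main__":
    # Hypothetical rules, in the same shape as generated per-field constraints.
    rules = {"RepetitionTime": {"minimum": 1.98, "maximum": 2.02},
             "PhaseEncodingDirection": {"const": "j-"}}
    code = validate_session([
        ("sub-01_ses-pre_task-rest_bold.json", {"RepetitionTime": 2.0, "PhaseEncodingDirection": "j-"}, rules),
        ("sub-01_ses-pre_task-nback_bold.json", {"RepetitionTime": 2.5, "PhaseEncodingDirection": "j"}, rules),
    ])
    print("exit code:", code)
```

In a CI entry point, the returned value would be passed to `sys.exit()` so that the job fails on any mismatch.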

The idea would be to integrate this tool as a CI action that runs on PRs adding new sessions to a DataLad BIDS dataset (alongside bids-validator or other tests).

Before going further, I wanted to check whether this makes sense, whether there are any design flaws, and whether anything is missing. (For example, this doesn’t cover bvecs/bvals compliance, which would require a separate validation.)

Thanks for your valuable feedback!

Cheers,

Basile