I’m wondering if someone has already built fMRIPrep derivatives for 7-tesla resting-state fMRI data and is willing to share them?

Well, there is this one on OpenNeuro, but I can’t see any fMRIPrep derivatives with it:

https://openneuro.org/datasets/ds001168/versions/1.0.1

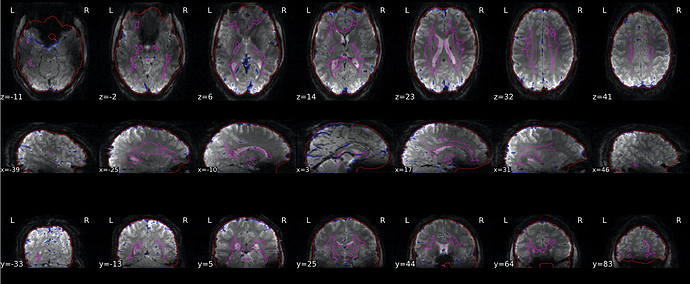

And it comes with an MP2RAGE anat which I think fMRIprep “does not like” (or at least that was the case last time I checked) because of the “salt and pepper” background noise of the image.

About the background noise in MP2RAGE UNI images mentioned by @Remi-Gau, there are several ways to handle this. Here are some references off the top of my head:

- See Figure 2 from: O’Brien K.R. et al. (2014) Robust T1-Weighted Structural Brain Imaging and Morphometry at 7T Using MP2RAGE. PLoS ONE 9(6): e99676. DOI:10.1371/journal.pone.0099676.

- One implementation that I am familiar with is here in LayNii.

- Another solution that uses AFNI tools is here.

@Remi-Gau Glad you mentioned this issue. I wasn’t aware of it. I got fmriprep derivatives, but they don’t work well, as you pointed out.

@ofgulban thanks for the link and the code. For the cpp code, do I need to run it on the MP2RAGE images of all subjects and then re-run fmriprep?

@h_b, I would guess so. The more you mitigate the noise beforehand, the better (in theory). However, some caution is needed: mitigating the background noise comes at the expense of reintroducing some of the bias field into the image. This bias field (also called the intensity inhomogeneity field) can be corrected afterwards by another algorithm, such as N4 bias field correction or one of many similar methods. By the way, I assume you only want a decent segmentation from these MP2RAGE UNI images and are not interested in the quantitative imaging aspect of MP2RAGE.
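To make the trade-off above concrete, here is a minimal sketch of a regularized MP2RAGE ratio in the spirit of the O’Brien et al. (2014) reference. It is not the LayNii or AFNI implementation. The function name `mp2rage_robust`, the way the sign of INV1 is recovered from the UNI image, and the choice of `beta` are assumptions for illustration; it expects the UNI image to be already rescaled so that negative values mean negative contrast.

```python
import numpy as np


def mp2rage_robust(inv1, inv2, uni, beta):
    """Regularized MP2RAGE ratio (illustrative sketch, not a reference implementation).

    inv1, inv2 : magnitude images of the two inversions (same shape)
    uni        : UNI image rescaled to roughly [-0.5, 0.5]; only its sign is used
    beta       : positive regularization term (hypothetical tuning parameter)
    """
    if beta <= 0:
        raise ValueError("beta must be positive")
    inv1 = np.asarray(inv1, dtype=np.float64)
    inv2 = np.asarray(inv2, dtype=np.float64)
    uni = np.asarray(uni, dtype=np.float64)

    # Magnitude images lose the sign of INV1; assume it can be taken from UNI.
    inv1_signed = np.where(uni < 0, -inv1, inv1)

    # beta dominates where both inversions are near zero (air), so the
    # background settles at -0.5 instead of random values.
    numerator = inv1_signed * inv2 - beta
    denominator = inv1 ** 2 + inv2 ** 2 + 2.0 * beta
    return numerator / denominator
```

A larger `beta` suppresses more of the background noise but pulls tissue values further away from the unregularized ratio, which is the bias field reintroduction mentioned above.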

@ofgulban well, I am only interested in the gray matter. My goal is to extract the gray matter from fMRI data. Not sure if the other parameters you mentioned would have an effect on my goal?

Then, I would guess that mitigating the background noise at the beginning would help with the rest of the processing as the image would look more like T1w MPRAGE.

Another way would be to derive your brain mask from the MP2RAGE INV2 image and apply it to the UNI image. This way you basically mask out the noise outside the head plus the skull, which seems to cause the most trouble for you, looking at the screenshot.
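A rough sketch of that masking idea, assuming the INV2 and UNI volumes are already loaded as arrays on the same grid. The simple intensity threshold is only a stand-in: it removes the air background but not the skull, so in practice the mask would come from a proper brain extraction run on INV2. The function name and the `fraction` parameter are hypothetical.

```python
import numpy as np


def mask_uni_with_inv2(uni, inv2, fraction=0.1, fill_value=None):
    """Replace UNI voxels outside an INV2-derived mask with a constant.

    fraction   : threshold as a fraction of the INV2 maximum (assumed heuristic)
    fill_value : value written outside the mask; defaults to the UNI minimum
    """
    uni = np.asarray(uni, dtype=np.float64)
    inv2 = np.asarray(inv2, dtype=np.float64)
    if uni.shape != inv2.shape:
        raise ValueError("UNI and INV2 must have the same shape")

    # INV2 has a clean, dark background, so low INV2 intensity marks air.
    mask = inv2 > fraction * inv2.max()

    if fill_value is None:
        fill_value = uni.min()
    masked_uni = np.where(mask, uni, fill_value)
    return masked_uni, mask
```

Filling with the UNI minimum rather than zero keeps the background darker than any tissue, so the result looks closer to a conventional T1w image.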

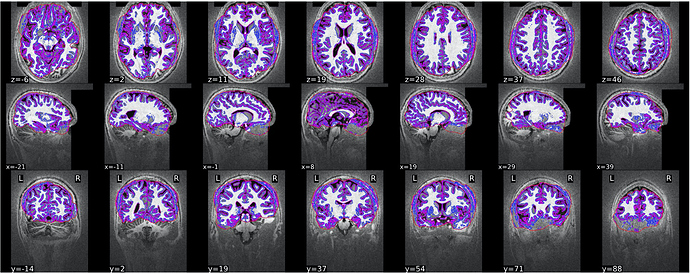

@ofgulban Thanks for following up. I think the output derivative works fine for BOLD data, as shown below. Instead of re-running fmriprep, which would take too much time, I’d extract the gray matter mask from MPRAGE images using NIGHRES and then apply it to the pre-processed BOLD images obtained from fmriprep. What do you think?

Might work. Though I would rather not assure you of something without knowing the details of the project and what kind of error tolerance is desired for further analyses, which is too much to judge here from a few screenshots.
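For what it is worth, the masking step proposed above could look roughly like the sketch below. It assumes the gray matter map has already been resampled to the same space and voxel grid as the preprocessed BOLD series, which an anatomical-resolution mask usually is not by default. The function name and the 0.5 threshold are hypothetical.

```python
import numpy as np


def extract_masked_timeseries(bold, gm_prob, threshold=0.5):
    """Return BOLD time series of voxels inside a gray matter mask.

    bold    : 4D array (x, y, z, time)
    gm_prob : 3D gray matter probability (or binary) map on the same grid
    Returns an array of shape (n_voxels, n_timepoints) and the boolean mask.
    """
    bold = np.asarray(bold)
    gm_prob = np.asarray(gm_prob)
    if bold.ndim != 4 or bold.shape[:3] != gm_prob.shape:
        raise ValueError("mask must match the spatial grid of the BOLD series")

    mask = gm_prob > threshold
    # Boolean indexing over the three spatial axes keeps the time axis intact.
    return bold[mask], mask
```

The shape check is there on purpose: a mismatch between the mask grid and the BOLD grid is the most likely failure when combining outputs from two different pipelines.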

You will get there, hope it helped.
