We introduce a saliency-map method that produces meaningful attributions for time-series machine learning models. Instead of restricting explanations to the raw time domain, our approach generates saliency maps in more relevant domains, such as the frequency domain or independent components (ICA). This improves interpretability when deep models are applied to neural time-series signals.

Why it matters

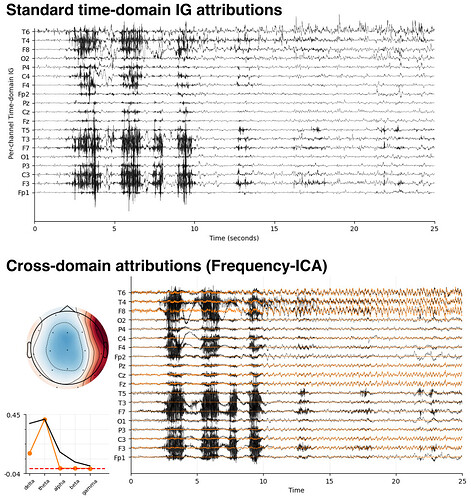

Standard attribution methods such as Integrated Gradients assign an importance score to each individual time point. Such scores can be difficult to interpret in neuroscience, where signals are usually analyzed in terms of frequency bands or source components. Our method extends these attributions to domains that are more aligned with this scientific practice.
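To make the idea concrete, here is a minimal NumPy sketch of Integrated Gradients computed both per time sample and per frequency coefficient. It is not the toolkit's implementation. The toy model `f(x) = tanh(w · x)`, its analytic gradient, the 128 Hz sampling rate, and the use of an orthonormal DCT-II as the frequency transform (chosen here because it keeps coefficients real) are all assumptions made for illustration. The key step is composing the model with the inverse transform, `g(z) = f(D.T @ z)`, so that gradients with respect to the coefficients follow from the chain rule.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II matrix D: coefficients z = D @ x, inverse x = D.T @ z."""
    k = np.arange(n)[:, None]                      # basis (frequency) index
    t = np.arange(n)[None, :]                      # sample (time) index
    d = np.cos(np.pi * (t + 0.5) * k / n)
    d[0] *= np.sqrt(1.0 / n)
    d[1:] *= np.sqrt(2.0 / n)
    return d

def integrated_gradients(grad_fn, inp, baseline, steps=256):
    """Midpoint-rule Integrated Gradients along the straight path baseline -> inp."""
    total = np.zeros_like(inp)
    for a in (np.arange(steps) + 0.5) / steps:
        total += grad_fn(baseline + a * (inp - baseline))
    return (inp - baseline) * total / steps

# Toy differentiable "model" (assumption): f(x) = tanh(w . x), analytic gradient.
fs, n = 128, 128                                   # 1 s window at 128 Hz (assumed)
t = np.arange(n) / fs
rng = np.random.default_rng(0)
x = np.sin(2 * np.pi * 10 * t) + 0.3 * rng.normal(size=n)   # 10 Hz rhythm + noise
w = 0.05 * np.sin(2 * np.pi * 10 * t)
f = lambda sig: np.tanh(w @ sig)
grad_f = lambda sig: (1.0 - np.tanh(w @ sig) ** 2) * w

# Standard time-domain IG: one score per sample.
attr_time = integrated_gradients(grad_f, x, np.zeros(n))

# Frequency-domain IG: attribute to the DCT coefficients z of x.
# The model is composed with the inverse transform, g(z) = f(D.T @ z),
# so by the chain rule grad_z g = D @ grad_x f.
D = dct_matrix(n)
z = D @ x
attr_freq = integrated_gradients(lambda zz: D @ grad_f(D.T @ zz), z, np.zeros(n))
freqs = np.arange(n) * fs / (2 * n)                # DCT-II coefficient k ~ k * fs / (2n) Hz
```

Because the DCT is orthonormal and the zero baseline maps to the zero signal, both attribution vectors sum to the same value, `f(x) - f(0)`, up to the Riemann approximation. The frequency version redistributes that total over spectral components (located by `freqs`) instead of over time samples.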

Key Features

Cross-Domain Saliency Maps is an open-source toolkit that:

- Provides frequency-domain and ICA-domain attributions out of the box

- Extends to any invertible transform with a differentiable inverse

- Works plug-and-play with PyTorch and TensorFlow models, with no retraining required

- Has been demonstrated on EEG seizure detection and on other datasets
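The same recipe applies to any invertible transform whose inverse is differentiable: attribute to the transformed coefficients and back-propagate through the inverse. The NumPy sketch below applies it to ICA-style sources, where a multichannel recording is modeled as `X = A @ S`. The helper names (`cross_domain_ig`, `inverse`, `inverse_vjp`) are hypothetical and not the repository's API. A random matrix stands in for a mixing matrix `A` that would normally be estimated by ICA, and the toy model and its gradient are assumptions.

```python
import numpy as np

def cross_domain_ig(grad_fn, z, inverse, inverse_vjp, steps=256):
    """Integrated Gradients on coefficients z for a model defined on x = inverse(z).

    grad_fn(x)        -> gradient of the scalar model output w.r.t. the input x
    inverse(z)        -> input-domain signal reconstructed from coefficients z
    inverse_vjp(z, v) -> vector-Jacobian product of `inverse` at z with cotangent v
    Baseline: all coefficients set to zero.
    """
    total = np.zeros_like(z)
    for a in (np.arange(steps) + 0.5) / steps:     # midpoint rule on [0, 1]
        za = a * z
        total += inverse_vjp(za, grad_fn(inverse(za)))
    return z * total / steps

rng = np.random.default_rng(0)
n_ch, n_t = 4, 256
A = rng.normal(size=(n_ch, n_ch))          # stand-in for an ICA mixing matrix (assumed)
S = rng.normal(size=(n_ch, n_t))           # stand-in for independent-component activations
X = A @ S                                  # multichannel recording, X = A @ S
W_model = 0.01 * rng.normal(size=(n_ch, n_t))

# Toy model on the multichannel input (assumption) and its analytic gradient.
f = lambda x: np.tanh(np.sum(W_model * x))
grad_f = lambda x: (1.0 - np.tanh(np.sum(W_model * x)) ** 2) * W_model

attr = cross_domain_ig(
    grad_f, S,
    inverse=lambda s: A @ s,               # sources -> channels
    inverse_vjp=lambda s, v: A.T @ v,      # linear inverse: the VJP is A^T v
)
per_component = attr.sum(axis=1)           # one importance score per component
```

Summing over time gives one score per independent component. Passing the vector-Jacobian product explicitly keeps the helper usable for nonlinear inverses; in PyTorch or TensorFlow, automatic differentiation would supply it instead.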

Example outputs

Cross-domain attributions reveal more interpretable patterns by surfacing features in frequency bands and in independent components.
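Frequency-domain attributions can then be summarized per frequency band. The helper below is a hypothetical post-processing step, not part of the toolkit's documented API. It assumes per-coefficient attributions from an orthonormal DCT-II over an `n`-sample window sampled at `fs` Hz, where coefficient `k` corresponds to `k * fs / (2 * n)` Hz. It also uses commonly cited, approximate EEG band edges; exact boundaries vary between studies.

```python
import numpy as np

# Approximate, commonly used EEG band edges in Hz (assumed; conventions vary).
EEG_BANDS = {
    "delta": (0.5, 4.0),
    "theta": (4.0, 8.0),
    "alpha": (8.0, 13.0),
    "beta": (13.0, 30.0),
    "gamma": (30.0, 100.0),
}

def band_attributions(attr, fs, bands=EEG_BANDS):
    """Sum 1-D per-coefficient DCT-II attributions into frequency bands.

    Coefficient k of an n-sample DCT-II window sampled at fs Hz
    corresponds to a frequency of k * fs / (2 * n) Hz.
    """
    attr = np.asarray(attr, dtype=float)
    n = attr.shape[0]
    freqs = np.arange(n) * fs / (2.0 * n)
    return {name: float(attr[(freqs >= lo) & (freqs < hi)].sum())
            for name, (lo, hi) in bands.items()}

# Usage with a synthetic attribution vector (illustrative values, not real results).
fs, n = 256, 512
attr = np.zeros(n)
attr[40] = 1.0    # 40 * 256 / 1024 = 10 Hz -> alpha
attr[8] = 0.5     # 8 * 256 / 1024 = 2 Hz   -> delta
per_band = band_attributions(attr, fs)
```

Coefficients outside every band (here, those above 100 Hz) are simply left out of the summary.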

Try it out:

- What does your deep model see in your EEG? (Google Colab demo)

- Get the code & run it on your models (GitHub repo) https://github.com/esl-epfl/cross-domain-saliency-maps

- Read the full story (arXiv preprint) https://arxiv.org/pdf/2505.13100

We’d be happy to hear your thoughts and your experiences applying this method to neural time-series data.