What happened?

We recently installed fMRIPrep and successfully ran two test participants with no errors reported. We then tried to run a participant with a pediatric template, and it produced file-not-found errors (`FileNotFoundError`). We reverted to the default adult MNI template, but the same errors appeared. We suspect the errors may be related to template files that were moved while someone was switching to the pediatric template (I do not know which files were moved; someone else did this).

The errors do not stop the final HTML report from being generated, and the results in it look roughly the same as those from the original test participants, which had no errors.

We have run through all of fMRIPrep's system checks, resolved the few issues they raised, and rerun a participant; the same error appears about 30 minutes into the run.

We are using a dataset downloaded from OpenNeuro (link if it is helpful).

What command did you use?

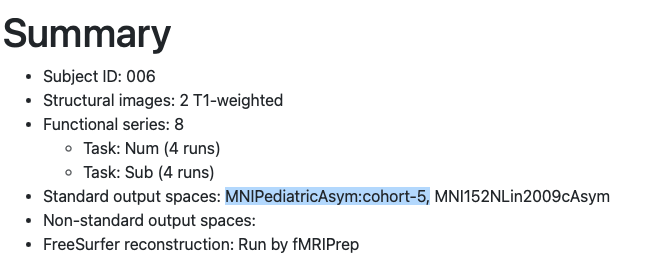

```
RUNNING: docker run --rm -e DOCKER_VERSION_8395080871=25.0.2 -it -v /Volumes/ITALY/license.txt:/opt/freesurfer/license.txt:ro -v /Volumes/ITALY/raw:/data:ro -v /Volumes/ITALY/derivatives:/out -v /Volumes/ITALY/tmp:/scratch nipreps/fmriprep:23.2.0 /data /out participant --skip_bids_validation --participant_label 016 --dummy-scans 0 -w /scratch --output-spaces MNI152NLin2009cAsym
```
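Paths reported from inside the container (for example under `/scratch`) correspond to host directories through the `-v` mounts in this command. As a rough sketch in plain Python (not part of fMRIPrep or Docker; `parse_mounts` and `container_to_host` are hypothetical helper names), the mapping can be worked out as below. It assumes only the `-v host:container[:mode]` form is used and that host paths contain no `:`, which holds for the macOS paths here.

```python
import shlex
from pathlib import PurePosixPath

# The docker command from the log, split over several lines for readability.
CMD = (
    "docker run --rm -e DOCKER_VERSION_8395080871=25.0.2 -it "
    "-v /Volumes/ITALY/license.txt:/opt/freesurfer/license.txt:ro "
    "-v /Volumes/ITALY/raw:/data:ro "
    "-v /Volumes/ITALY/derivatives:/out "
    "-v /Volumes/ITALY/tmp:/scratch "
    "nipreps/fmriprep:23.2.0 /data /out participant --skip_bids_validation "
    "--participant_label 016 --dummy-scans 0 -w /scratch "
    "--output-spaces MNI152NLin2009cAsym"
)


def parse_mounts(argv):
    """Return (host, container) pairs for every `-v host:container[:mode]` argument."""
    mounts = []
    for flag, spec in zip(argv, argv[1:]):
        if flag == "-v":
            # Assumption: host paths contain no ":" (true for these macOS paths).
            host, container = spec.split(":")[:2]
            mounts.append((PurePosixPath(host), PurePosixPath(container)))
    return mounts


def container_to_host(path, mounts):
    """Translate a path seen inside the container to the host path, or None."""
    target = PurePosixPath(path)
    best = None
    for host, container in mounts:
        try:
            rel = target.relative_to(container)
        except ValueError:
            continue  # this mount does not contain the path
        # Prefer the most specific (longest) container prefix if mounts are nested.
        if best is None or len(container.parts) > len(best[0].parts):
            best = (container, host / rel)
    return None if best is None else best[1]


mounts = parse_mounts(shlex.split(CMD))
failing = ("/scratch/fmriprep_23_2_wf/sub_016_wf/anat_fit_wf/ds_template_wf/"
           "ds_t1w_ref_xfms/mapflow/_ds_t1w_ref_xfms1/_report/report.rst")
print(container_to_host(failing, mounts))
```

Run against the failing path from the log, this maps the `report.rst` location under `/scratch` to the same relative path under `/Volumes/ITALY/tmp` on the external drive.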

What version of fMRIPrep are you running?

23.2.0

How are you running fMRIPrep?

Docker

Please copy and paste any relevant log output.

```
Node: fmriprep_23_2_wf.sub_016_wf.anat_fit_wf.ds_template_wf.ds_t1w_ref_xfms
Working directory: /scratch/fmriprep_23_2_wf/sub_016_wf/anat_fit_wf/ds_template_wf/ds_t1w_ref_xfms

Traceback (most recent call last):
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/nipype/pipeline/engine/utils.py", line 94, in nodelist_runner
    result = node.run(updatehash=updatehash)
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 543, in run
    write_node_report(self, result=result, is_mapnode=isinstance(self, MapNode))
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/nipype/pipeline/engine/utils.py", line 208, in write_node_report
    report_file.write_text("\n".join(lines), encoding='utf-8')
  File "/opt/conda/envs/fmriprep/lib/python3.10/pathlib.py", line 1154, in write_text
    with self.open(mode='w', encoding=encoding, errors=errors, newline=newline) as f:
  File "/opt/conda/envs/fmriprep/lib/python3.10/pathlib.py", line 1119, in open
    return self._accessor.open(self, mode, buffering, encoding, errors,
FileNotFoundError: [Errno 2] No such file or directory: '/scratch/fmriprep_23_2_wf/sub_016_wf/anat_fit_wf/ds_template_wf/ds_t1w_ref_xfms/mapflow/_ds_t1w_ref_xfms1/_report/report.rst'
```
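The traceback shows the failure happens while nipype writes the node's `report.rst`, so some directory on that path was missing at that moment. To check how much of the working-directory tree actually exists after a failure, a small Python sketch like the one below could be run on the host (`split_existing` is a hypothetical helper, not part of nipype or fMRIPrep). It only reports what is on disk when it runs, so it is informative only if the working directory has not been cleaned or reused since.

```python
from pathlib import Path


def split_existing(path):
    """Split `path` into (deepest existing ancestor, missing remainder)."""
    target = Path(path)
    # Walk from the full path up towards the root and stop at the first hit.
    for ancestor in [target, *target.parents]:
        if ancestor.exists():
            # The remainder lists the components that are missing ("." if none).
            return ancestor, target.relative_to(ancestor)
    raise FileNotFoundError(f"no existing ancestor for {target}")
```

For example, with the `/Volumes/ITALY/tmp:/scratch` mount from the command, the host-side call would be `split_existing("/Volumes/ITALY/tmp/fmriprep_23_2_wf/sub_016_wf/anat_fit_wf/ds_template_wf/ds_t1w_ref_xfms/mapflow/_ds_t1w_ref_xfms1/_report/report.rst")`.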