Hi @ChrisGorgolewski

Thanks for that. Below are the answers to your questions.

OS: CentOS 7, kernel: 3.10.0-693.17.1.el7.x86_64 #1 SMP Thu Jan 25 20:13:58 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

Docker version: Singularity version 3.0.1 was used to convert the Docker image of fMRIPrep (version 1.2.5)

Shell script: it defined the directory variables and ran the following commands:

    module load fmriprep/1.2.5
    unset PYTHONPATH

    # Run fmriprep
    fmriprep \
      --participant-label ${subject} \
      --output-space {T1w,template} \
      --t2s-coreg \
      --mem_mb 80000 \
      -n-cpus 8 \
      --fs-license-file ${fslicense} \
      --fs-no-reconall \
      --use-syn-sdc \
      -w ${workdir} \
      ${bidsdir} \
      ${derivsdir} \
      --skip_bids_validation \
      participant

Hi, just wanted to say that I’m having the same issue. I’m running fmriprep on a supercomputer with 100 GB allotted for one participant (pilot testing, too), and I’m getting the same error. Any updates on this?

I get the same error (during the call to the BIDS Validator) with the Docker fmriprep versions 1.2.4 and 1.2.5 (1.2.3 runs fine) on Ubuntu 16.04.5 LTS, on a machine with 32 GB of RAM, even with a small dataset (one participant from the YALE-trt dataset).

PS: using option --skip-bids-validation, docker fmriprep 1.2.5 runs fine, hence the issue is definitely with the BIDS validator

@jcrdubois @pettitta - any chance you could share a small dataset that consistently triggers this issue for you?

I sent you a message with a link to the dataset. Please let me know if you need anything else!

ETA: I’ve also run it with fmriprep 1.2.5 and 1.2.4, and the only way to get either version to work is to add the --skip_bids_validation flag.

I fixed the issue by fixing a naming error: the bold files were named sub-{}_ses-{}_run-{}_task-rest_bold.nii.gz instead of sub-{}_ses-{}_task-{}_run-{}_bold.nii.gz, which meant they did not comply with the BIDS specification.

It thus appears that the BIDS validator crashes with an out-of-memory error in versions 1.2.4 and 1.2.5 in some cases when the data is not BIDS compliant?

I can send you the original, offending data if it is useful, let me know.
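For anyone who wants to catch this kind of naming problem before fMRIPrep does, here is a minimal sketch that checks whether the entities in a BOLD filename appear in the expected order (task before run, as in the fix described above). The entity list is a simplified assumption covering only a few common entities, and the function names are made up for illustration; the BIDS specification and the BIDS validator remain the authority.

```python
# Assumed, simplified entity order for functional BOLD files. The BIDS
# specification defines more entities than the ones listed here.
ENTITY_ORDER = ["sub", "ses", "task", "acq", "rec", "run", "echo"]


def entity_keys(filename):
    """Return the entity keys (the text before each '-') in filename order."""
    stem = filename.split(".", 1)[0]   # drop the extension, e.g. .nii.gz
    parts = stem.split("_")[:-1]       # the last part is the suffix, e.g. 'bold'
    return [p.split("-", 1)[0] for p in parts if "-" in p]


def check_entity_order(filename):
    """Return a list of human-readable problems; empty if none were found."""
    keys = entity_keys(filename)
    problems = []

    unknown = [k for k in keys if k not in ENTITY_ORDER]
    if unknown:
        problems.append(f"entities not in the assumed list: {unknown}")

    known = [k for k in keys if k in ENTITY_ORDER]
    expected = sorted(known, key=ENTITY_ORDER.index)
    if known != expected:
        problems.append(f"order is {known}, expected {expected}")

    if "task" not in keys:
        problems.append("missing 'task' entity")

    return problems
```

Calling check_entity_order on a name like sub-01_ses-1_run-1_task-rest_bold.nii.gz reports the run/task order problem, while sub-01_ses-1_task-rest_run-1_bold.nii.gz comes back with an empty list. It only looks at entity order, so it is not a substitute for running the validator itself.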

@jcrdubois thanks for this. I will try this and keep you guys posted

Hi @jcrdubois

I’ve gone through the BIDS spec doc again, and tried adding in “run-1” and / or “ses-1” to my naming system but I kept running into the same memory error. I’ve attached examples below.

Also, is there a way you could share a screen shot showing how you’ve named your ME-files?

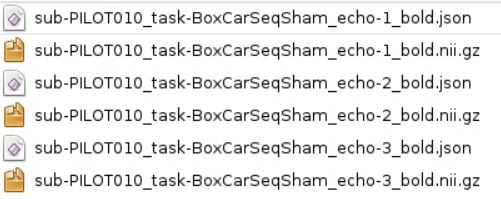

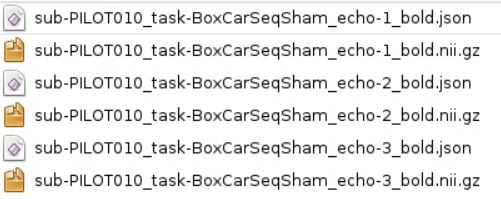

Example with no run or ses number:

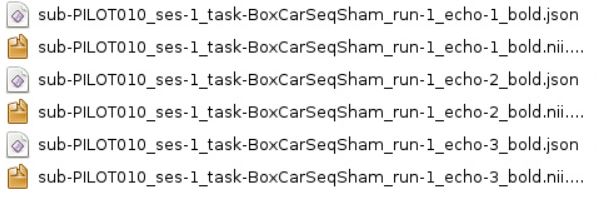

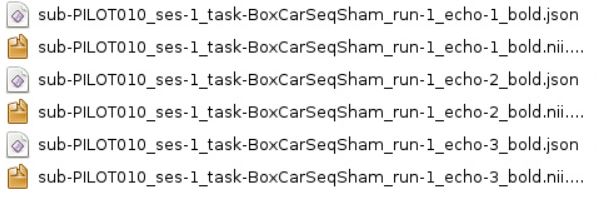

Run and ses number added:

Thank you for your help. I’m still learning the ropes with ME-data and fMRI in general and all your replies are very much appreciated.

Cheers,

Thapa

Could you try using the standalone validator (https://github.com/bids-standard/bids-validator) with the environment variable NODE_OPTIONS="--max-old-space-size=4096" set?
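In case it helps, here is one way this could be scripted. It is only a sketch: it assumes the standalone validator has been installed (for example via npm) so that a bids-validator command is on the PATH, and the helper names validator_env and run_validator are hypothetical. Note that it overwrites any NODE_OPTIONS value already set in the environment.

```python
import os
import subprocess


def validator_env(max_old_space_mb=4096, base_env=None):
    """Copy the environment and set Node's old-space heap limit (in MB)."""
    env = dict(os.environ if base_env is None else base_env)
    # Overwrites any existing NODE_OPTIONS value in the copied environment.
    env["NODE_OPTIONS"] = f"--max-old-space-size={max_old_space_mb}"
    return env


def run_validator(bids_dir, max_old_space_mb=4096):
    """Run the bids-validator CLI on bids_dir and return the CompletedProcess.

    Assumes a 'bids-validator' executable is available on the PATH.
    """
    return subprocess.run(
        ["bids-validator", bids_dir],
        env=validator_env(max_old_space_mb),
        capture_output=True,
        text=True,
        check=False,  # inspect returncode/stdout instead of raising
    )
```

After calling run_validator on the dataset directory, the returncode and stdout of the result show whether the validator finished and what it reported. If it still runs out of memory at 4096 MB, the limit can be raised through max_old_space_mb.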

Hi @TribikramT my files are named exactly like I specified above,

sub-{}_ses-{}_task-{}_run-{}_bold.nii.gz

(it is not ME data – I realize that this thread was originally about ME data)

Does your data pass the online BIDS validator without errors now? In my case, v1.2.4 and v1.2.5 crashed when my data was not BIDS compliant. This is an issue to look into and fix (@ChrisGorgolewski should I open an issue on GitHub for this?), but in the meantime you should ensure your data is BIDS compliant, and hopefully you won’t run into the memory error.

Hi All,

Just an update.

fMRIPrep ran with no memory error, and slice-timing correction (STC) was applied. I relocated the files to a simpler folder structure (<project_name> - - <[rawdata] [derivatives] [temp]>) instead of the nested one (<project_name> - - <pilot_testing> - - <fmriprep_versions> - - <sub_ID> - - <[rawdata] [derivatives] [temp]>).

I am not sure why this approach solved the issue. However, fMRIPrep v1.2.5 now works on my ME-data. Yay!

I found this solution by first working out in which folder structure the BIDS validator worked (both the web version and the command-line version). Once I had determined where it worked, I pointed the fMRIPrep script at the data in that folder, and it worked!

Thank you very much for your help. Much appreciated.

Cheers,

Thapa
