Summary of what happened:

Hello,

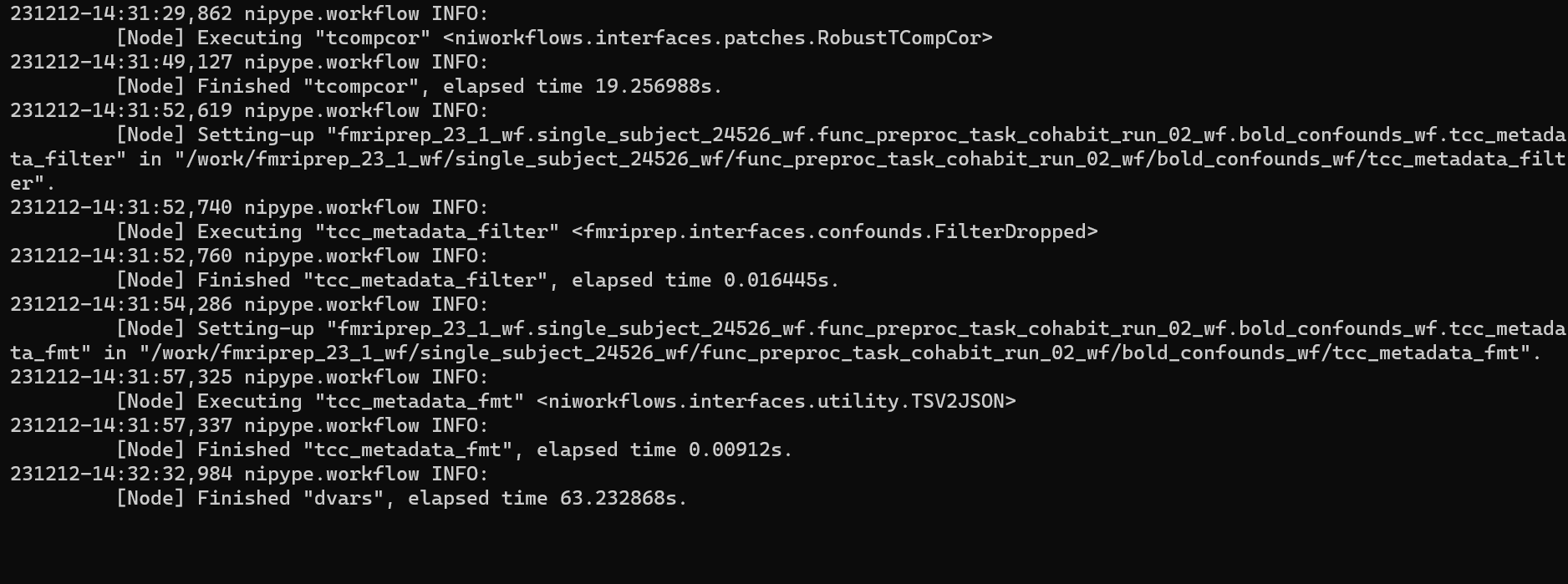

When I run fMRIPrep, some of my sessions freeze about 30 minutes in, usually at this point in the pipeline (see the screenshot above):

231212-14:32:32,984 nipype.workflow INFO:
     [Node] Finished "dvars", elapsed time 63.232868s.

In one session, pressing Enter several times eventually made it continue (even then, only after a delay of about 15 minutes or longer), but other sessions have stayed stuck. When I cancel the run and immediately restart it, I quickly get the following error:

Traceback (most recent call last):
  File "/opt/conda/envs/fmriprep/bin/fmriprep", line 8, in <module>
    sys.exit(main())
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/fmriprep/cli/run.py", line 227, in main
    failed_reports = generate_reports(
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/fmriprep/reports/core.py", line 96, in generate_reports
    report_errors = [
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/fmriprep/reports/core.py", line 97, in <listcomp>
    run_reports(
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/fmriprep/reports/core.py", line 79, in run_reports
    return Report(
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/niworkflows/reports/core.py", line 307, in __init__
    self._load_config(Path(config or pkgrf("niworkflows", "reports/default.yml")))
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/fmriprep/reports/core.py", line 48, in _load_config
    self.index(settings["sections"])
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/niworkflows/reports/core.py", line 394, in index
    self.errors = [read_crashfile(str(f)) for f in error_dir.glob("crash*.*")]
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/niworkflows/reports/core.py", line 394, in <listcomp>
    self.errors = [read_crashfile(str(f)) for f in error_dir.glob("crash*.*")]
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/niworkflows/utils/misc.py", line 184, in read_crashfile
    return _read_txt(path)
  File "/opt/conda/envs/fmriprep/lib/python3.10/site-packages/niworkflows/utils/misc.py", line 241, in _read_txt
    cur_key, cur_val = tuple(line.split(" = ", 1))
ValueError: not enough values to unpack (expected 2, got 1)

Sentry is attempting to send 1 pending events
Waiting up to 2 seconds
Press Ctrl-C to quit
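The last frame of the traceback shows a crash file being read line by line, with each line split once on " = " and unpacked into a key and a value. Any line without that separator yields a one-element tuple, which produces exactly this ValueError. The sketch below only mirrors that one unpacking step; `split_key_value` and `find_crash_files` are hypothetical helpers, the sample line is invented, and searching recursively from a chosen folder is an assumption rather than what niworkflows itself does.

```python
from pathlib import Path


def split_key_value(line):
    """Unpack a line the same way the traceback shows (sketch only).

    Mirrors `cur_key, cur_val = tuple(line.split(" = ", 1))`; a line with
    no " = " separator raises ValueError.
    """
    cur_key, cur_val = tuple(line.split(" = ", 1))
    return cur_key, cur_val


def find_crash_files(root):
    """List files matching the "crash*.*" pattern seen in the traceback.

    `root` is a hypothetical starting folder (for example, the mounted
    output directory); the recursive search is an assumption.
    """
    return sorted(Path(root).rglob("crash*.*"))


if __name__ == "__main__":
    # A well-formed line splits into two parts.
    print(split_key_value("in_file = /work/bold.nii.gz"))
    try:
        # A line with no " = " separator, e.g. one cut off mid-write.
        split_key_value("in_file")
    except ValueError as err:
        print(err)  # not enough values to unpack (expected 2, got 1)
```

Opening the files returned by `find_crash_files` and looking for a line without " = " (for example, one truncated when the run was cancelled) may help narrow down which crash file the report step is failing on; this is a guess based on the traceback, not a confirmed diagnosis.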

Also, my code is set to run fMRIPrep version 22.1.0. When I run it, fMRIPrep tells me that I am using a ‘dirty’ version. I don’t know why, as I don’t think it ever said this before. What does ‘dirty version’ mean? Is it an incomplete version of fMRIPrep 22.1.0, or is it outdated so that we can’t use 22.1.0 as normal?

Furthermore, when I set the code to use the latest fMRIPrep version, I still get this error (though it no longer says that I am using a ‘dirty’ version).

Command used (and if a helper script was used, a link to the helper script or the command generated):

docker run -ti --rm \
  -v "/mnt/h/SPM_data/445/bids/:/data:ro" \
  -v "/mnt/h/SPM_data/445/bids/derivatives/fmriprep:/out" \
  -v "/mnt/h/tmp/:/work" \
  -v "/mnt/h/SPM_data/445/:/fslic:ro" \
  -v "/mnt/h/SPM_data/445/:/plugin:ro" \
  nipreps/fmriprep:22.1.0 /data /out participant \
  --participant-label 25336 \
  -w /work \
  --fs-license-file /fslic/license.txt \
  --output-spaces anat MNI152NLin2009cAsym fsnative \
  --use-plugin /plugin/plugin_mem.yml \
  --stop-on-first-crash \
  --ignore sbref fieldmaps \
  --bold2t1w-init header \
  --skip-bids-validation

Version:

fMRIPrep v23.0.2, although in my code I set the version either to 22.1.0 (consistent with the rest of my successfully processed dataset) or to the latest version, 23.1.4.

Environment (Docker, Singularity, custom installation):

Docker on WSL (Ubuntu).

Data formatted according to a validatable standard? Please provide the output of the validator:

We validated the data using dcm2bids.

Relevant log outputs (up to 20 lines):

Just some general observations:

- The ‘func’ derivatives folder contains outputs only for the first run, although my session has two runs.

- A session that I did process successfully with the latest version of fMRIPrep ran very slowly and stalled at numerous points. My CPU usage ranges from around 50% to 100% while fMRIPrep is running. Is it possible that my computer's specs are not high enough for larger sessions? It seems strange to me that I only encounter this problem with some sessions. We are processing high-resolution data; I never had this issue with low-resolution data.
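To check whether resources are the bottleneck, it may help to see how many CPUs and how much RAM are actually visible from the WSL shell that launches Docker, since these can be lower than the physical machine's. The sketch below is a minimal, Linux-only check; `visible_resources` is a hypothetical helper, and it may not reflect every limit Docker applies to the container.

```python
import os


def visible_resources():
    """Return (CPU count, total RAM in GB) visible to this Linux/WSL process.

    Sketch only: uses Linux-specific calls; Docker limits such as CPU quotas
    are not necessarily reflected here.
    """
    n_cpus = len(os.sched_getaffinity(0))  # CPUs this process may run on
    page_size = os.sysconf("SC_PAGE_SIZE")  # bytes per memory page
    n_pages = os.sysconf("SC_PHYS_PAGES")  # total physical pages
    total_gb = page_size * n_pages / 1024**3
    return n_cpus, total_gb


if __name__ == "__main__":
    cpus, mem_gb = visible_resources()
    print(f"CPUs: {cpus}, RAM: {mem_gb:.1f} GB")
```

These numbers can then be compared against whatever CPU and memory settings are in `plugin_mem.yml`; if the plugin asks for more than is visible, that mismatch would be worth ruling out first.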

Many thanks for any help!