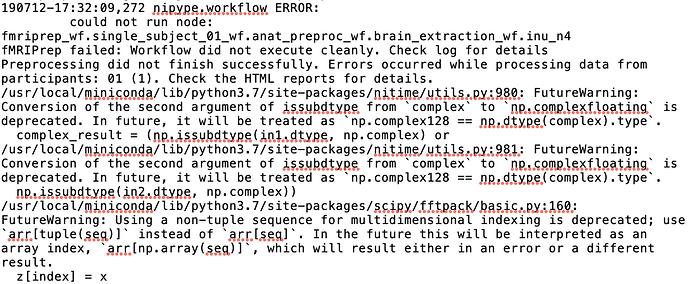

Here’s the entirety of the crash log:

Node: fmriprep_wf.single_subject_01_wf.anat_preproc_wf.brain_extraction_wf.inu_n4

Working directory: /gpfs/group/xxl213/default/Rosaleen/no-reconall-2/reports/fmriprep_wf/single_subject_01_wf/anat_preproc_wf/brain_extraction_wf/inu_n4

Node inputs:

args =

bias_image =

bspline_fitting_distance = 200.0

bspline_order =

convergence_threshold = 1e-07

copy_header = True

dimension = 3

environ = {'NSLOTS': '8'}

input_image = ['/gpfs/group/xxl213/default/Rosaleen/no-reconall-2/reports/fmriprep_wf/single_subject_01_wf/anat_preproc_wf/brain_extraction_wf/truncate_images/mapflow/_truncate_images0/sub-01_T1w_ras_valid_maths.nii.gz']

mask_image =

n_iterations = [50, 50, 50, 50]

num_threads = 8

output_image =

save_bias = False

shrink_factor = 4

weight_image =

Traceback (most recent call last):

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/plugins/multiproc.py", line 69, in run_node

result['result'] = node.run(updatehash=updatehash)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/nodes.py", line 472, in run

result = self._run_interface(execute=True)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/nodes.py", line 1259, in _run_interface

self.config['execution']['stop_on_first_crash'])))

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/nodes.py", line 1181, in _collate_results

(self.name, '\n'.join(msg)))

Exception: Subnodes of node: inu_n4 failed:

Subnode 0 failed

Error: Traceback (most recent call last):

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/utils.py", line 99, in nodelist_runner

result = node.run(updatehash=updatehash)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/nodes.py", line 472, in run

result = self._run_interface(execute=True)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/nodes.py", line 563, in _run_interface

return self._run_command(execute)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/pipeline/engine/nodes.py", line 643, in _run_command

result = self._interface.run(cwd=outdir)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/interfaces/base/core.py", line 375, in run

runtime = self._run_interface(runtime)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/interfaces/ants/segmentation.py", line 438, in _run_interface

runtime, correct_return_codes)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/interfaces/base/core.py", line 758, in _run_interface

self.raise_exception(runtime)

File "/usr/local/miniconda/lib/python3.7/site-packages/nipype/interfaces/base/core.py", line 695, in raise_exception

).format(**runtime.dictcopy()))

RuntimeError: Command:

N4BiasFieldCorrection --bspline-fitting [ 200 ] -d 3 --input-image /gpfs/group/xxl213/default/Rosaleen/no-reconall-2/reports/fmriprep_wf/single_subject_01_wf/anat_preproc_wf/brain_extraction_wf/truncate_images/mapflow/_truncate_images0/sub-01_T1w_ras_valid_maths.nii.gz --convergence [ 50x50x50x50, 1e-07 ] --output sub-01_T1w_ras_valid_maths_corrected.nii.gz --shrink-factor 4

Standard output:

Standard error:

Killed

Return code: 137
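
For what it's worth, a return code of 137 follows the usual shell convention of 128 plus the signal number, so it points to signal 9 (SIGKILL), which matches the "Killed" on standard error. That is often what an out-of-memory kill looks like, though the log alone does not confirm the cause. A minimal Python sketch of that decoding (the helper name `describe_return_code` is made up for illustration, and it assumes a POSIX system):

```python
import signal


def describe_return_code(code: int) -> str:
    """Interpret a shell-style exit status (hypothetical helper, POSIX assumed)."""
    if code > 128:
        # Shells report "killed by signal N" as 128 + N.
        signum = code - 128
        try:
            name = signal.Signals(signum).name
        except ValueError:
            # Signal number not known on this platform.
            name = f"signal {signum}"
        return f"terminated by {name}"
    return f"exited with status {code}"


print(describe_return_code(137))
```

On Linux this should report SIGKILL for 137. The process cannot catch SIGKILL, which would explain why N4BiasFieldCorrection left nothing else on standard output or standard error.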