Title: Markerless gesture-recognition and motion-capture ML/AI-based tool to drive music and speech generation, and develop neuroscientific/psychological theories of music creativity and music-movement-dance interactions

Mentors: Louis Martinez <louis.martinez@telecom-paris.fr>, Yohai-Eliel Berreby <yohaiberreby@gmail.com>, and Suresh Krishna <suresh.krishna@mcgill.ca>

Skill level: Intermediate - Advanced

Required skills: Comfortable with Python and modern AI tools. Experience with image/video processing and with deep-learning-based image-processing models. Familiarity with C/C++ programming, low-latency sound generation, image processing, audiovisual displays, and MediaPipe is an advantage but not required. Familiarity with Max, Csound, or Pure Data is preferred.

Time commitment: Full time (350 hours)

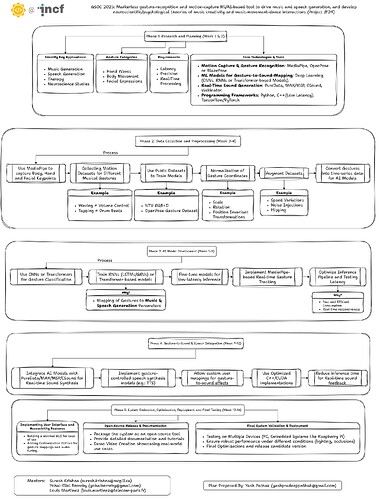

About: Last year, we developed GestureCap (GSoC 2024 report · GitHub), a tool that uses markerless gesture recognition and motion capture (via Google’s MediaPipe) to translate movements into sound with short latency. This enables, for example, new gesture-driven musical instruments and dancers who control their own music while dancing.

Aims: This year, we aim to build on this initial proof of concept to create a usable tool that enables gesture-responsive music and speech generation, and to characterize the low-latency properties of this system and the sense of agency that it enables. The development of GestureCap will facilitate both artistic creation and scientific exploration of multiple areas, including, for example, how people engage interactively with vision, sound, and movement and combine their respective latent creative spaces. Such a tool will also have therapeutic/rehabilitative applications in populations of people with limited ability to generate music, in whom agency and creativity in producing music have been shown to produce beneficial effects.

Website: GSoC 2024 report · GitHub

Tech keywords: Sound/music generation, Image processing, Python, MediaPipe, Wekinator, AI, Deep learning