Hello Monica, it is not clear yet that we will offer this project again in 2026. However, if you make any kind of substantial progress towards creating an ASL recognizer (using existing open-source resources or ones you build) based on MediaPipe input, we would be very interested in seeing a potential proposal and could set up a project based on that. This is quite ambitious, though. Please note that you would need a concrete plan that is likely to lead to something tangible in 3 months, and ideally have some elements already in place. All the best.

@suresh.krishna

Hello Sir, I hope you are doing well. I would like to share some background about myself.

I am very excited about the GestureCap project. I’m a CS (AIML) student and researcher with a background in Adaptive AI and Network Threat Analysis (with two published papers in intelligent monitoring).

While my previous work was in cybersecurity, the core challenge was the same: detecting meaningful patterns in high-speed, noisy data streams. I am eager to apply my experience in real-time anomaly detection and signal processing to markerless gesture recognition and motion capture.

I am particularly interested in how we can use Agentic AI to refine the feedback loop between physical movement and speech/music generation. I’ve already started looking into the neuroscientific theories mentioned and would love to discuss how my background in adaptive systems can contribute to the motion-to-music pipeline.

Looking forward to connecting and learning more about the technical requirements!

I am sharing my LinkedIn and GitHub repo so you can assess my work. I hope we can work together if the projects are offered again. We can discuss possible modifications, and I have long-standing experience in open source.

I am also sharing my recent published work on Adaptive AI for NIDS, which used Optuna, XGBoost and a DNN.

LinkedIn → www.linkedin.com/in/ayushrai13

GitHub → ayu-yishu13 (Ayush Rai) · GitHub

NIDS → LinkedIn

Hugging Face → LinkedIn

@Ayush_kumar_rai - thank you for your interest. The gesture-to-music project is not very well-suited for remote work, for various reasons. However, if you were to, for example, develop a concrete, feasible and solid proposal for something like a sign-language recognizer based on gestural input from a camera, that is something we would be interested in sponsoring. All the best.

Having some sort of working demo is essential… not just a plan or project outline.

Hi @suresh.krishna, thanks for the feedback. I totally get the remote work constraints on the music project, but I’m actually really excited about the Sign-Language Recognizer idea. I know a demo gives a better picture, but I can outline the basic idea of how it could be implemented. It’s a great pivot and fits well with my background in pattern recognition. To make sure this stays professional and doesn’t end up as just another ‘minor project,’ I’ve been thinking through the architecture to avoid the usual bottlenecks.

The Strategy: My main concern is hardware overhead: standard CNNs for video processing usually make my laptop (and most users’ machines) hang instantly. To solve this, I’m planning to use MediaPipe to strip away the raw pixel data and just extract 21 3D hand landmarks. Processing those coordinates (x, y, z) is 100x lighter than processing full frames. For the actual ‘intelligence,’ I’m looking at using a Temporal Model (likely an LSTM or a lightweight Transformer). Static frames don’t capture the ‘flow’ of sign language, so I want to focus on the sequence of movement over time. I’ll also bring in Optuna (which I used in my recent NIDS paper) to fine-tune the model so we get high accuracy without sacrificing the real-time FPS. I’ll get a repo started and build out a functional demo to show you. Looking forward to it and hope we stay connected!
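For readers following the thread, here is a minimal sketch of the data shape this strategy implies: each frame becomes a flat vector of 21 x 3 = 63 values, and consecutive frames are grouped into fixed-length windows that a temporal model could consume. It is not code from the poster's repo. The function names, the window and stride parameters, and the 640x480 RGB frame used for the size comparison are all assumptions made for illustration.

```python
# Illustrative sketch only; names and sizes are assumptions, not the poster's code.
NUM_LANDMARKS = 21  # hand landmarks per hand, as stated in the post
COORDS = 3          # x, y, z


def flatten_landmarks(landmarks):
    """Turn 21 (x, y, z) tuples into one flat 63-value feature vector."""
    if len(landmarks) != NUM_LANDMARKS:
        raise ValueError(f"expected {NUM_LANDMARKS} landmarks, got {len(landmarks)}")
    return [float(value) for point in landmarks for value in point]


def sliding_windows(frames, window, stride=1):
    """Group per-frame feature vectors into overlapping fixed-length sequences."""
    last_start = len(frames) - window
    return [frames[i:i + window] for i in range(0, last_start + 1, stride)]


# Input-size comparison under an ASSUMED 640x480 RGB frame (hypothetical resolution).
values_per_frame = 640 * 480 * 3
values_per_hand = NUM_LANDMARKS * COORDS
size_ratio = values_per_frame / values_per_hand
```

Each window produced by `sliding_windows` has shape (window, 63), which is the kind of sequence input an LSTM or a small Transformer would take. The raw input-size ratio computed here says nothing about end-to-end speed, since the landmark extraction step itself still has to process the full frame.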

I wish you all the best in this effort, and look forward to seeing what you come up with. I would not worry about fps/latency for the moment, but rather about being able to correctly classify/detect sign language gestures for automatic sign transcription, and seeing how far you go. Of course, there are many ongoing efforts in this area, some even open source, that you could refer to, consult, borrow from, or be inspired by. Good luck.

@suresh.krishna

Thank you for the guidance.

I will definitely implement this and come back to you as soon as I have a good solution.

Are you planning to offer this project for GSoC 2026?

see above…

Yes sir, I am already working on it. Could you tell me where I can contact you for feedback or suggested improvements? I will share a solid proposal, with a prototype as proof of work that is scalable and can be developed further over 3 months.

Hello Suresh sir,

Following up on our earlier discussion around gesture-based input, I wanted to share what I’ve been working on. I’ve built a working prototype to validate the idea end-to-end rather than just keeping it conceptual.

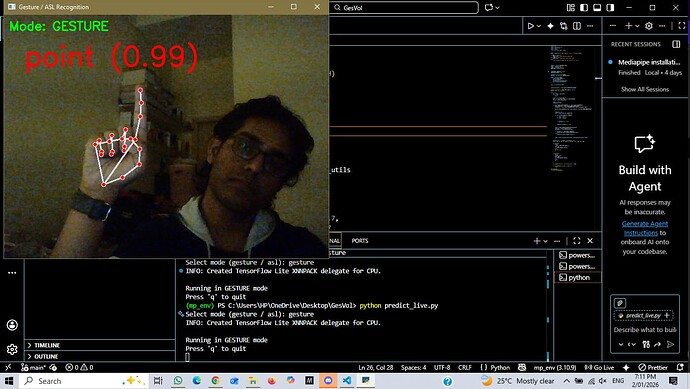

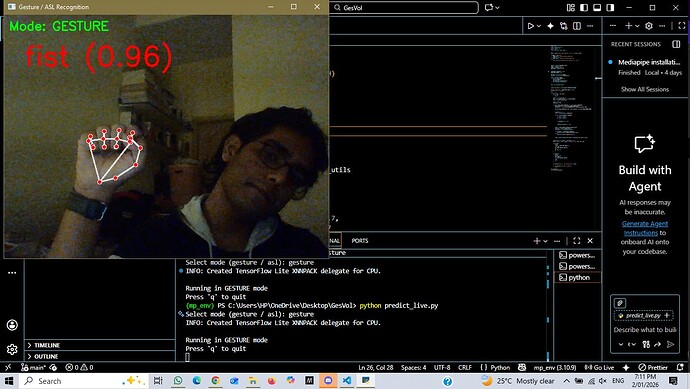

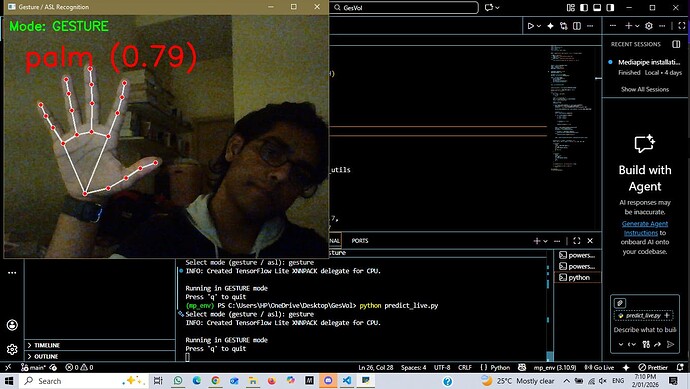

The current prototype uses a camera + MediaPipe hand landmarks and supports two scoped modes:

• Basic gesture recognition (fist, palm, point)

• ASL static fingerspelling prototype (limited subset, static signs only)

I focused on building a clean and controlled pipeline rather than optimizing for scale. The system uses landmark normalization relative to the wrist, left/right hand mirroring, confidence-based rejection for unknown gestures, and temporal smoothing to stabilize live predictions.
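As a rough illustration of the four steps named above, here is a minimal standard-library sketch. It is not taken from the linked repo. The unit-size scaling, the 0.6 threshold, the `"unknown"` label, the majority-vote smoother and all function and class names are assumptions; the only library fact relied on is that MediaPipe Hands uses index 0 for the wrist.

```python
import math
from collections import Counter, deque

WRIST = 0  # MediaPipe Hands indexes the wrist as landmark 0


def normalize(landmarks, is_left=False):
    """Move the wrist to the origin, mirror left hands, and scale to unit size."""
    wx, wy, wz = landmarks[WRIST]
    pts = [(x - wx, y - wy, z - wz) for x, y, z in landmarks]
    if is_left:
        # Flip x so left and right hands share one representation.
        pts = [(-x, y, z) for x, y, z in pts]
    # Scaling by the farthest landmark is an assumed choice, not from the post.
    scale = max(math.sqrt(x * x + y * y + z * z) for x, y, z in pts) or 1.0
    return [(x / scale, y / scale, z / scale) for x, y, z in pts]


def classify_or_reject(probs, threshold=0.6):
    """Return the top label, or 'unknown' if its probability is below threshold."""
    label, p = max(probs.items(), key=lambda kv: kv[1])
    return label if p >= threshold else "unknown"


class MajoritySmoother:
    """Stabilize live predictions with a majority vote over recent frames."""

    def __init__(self, size=5):
        self.history = deque(maxlen=size)

    def update(self, label):
        self.history.append(label)
        return Counter(self.history).most_common(1)[0][0]
```

In a live loop, each frame's landmarks would pass through `normalize`, then a classifier producing per-class probabilities, then `classify_or_reject`, and finally `MajoritySmoother.update`. A larger smoothing window gives steadier output at the cost of slower reaction to a real change of gesture.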

I intentionally kept the ASL part scoped to static signs to avoid over-claiming, and treated this as a prototype to validate the representation and identify limitations. Things like temporal modeling, continuous signing, and broader generalization are clearly next steps rather than solved problems.

This helped me understand practical issues like confidence calibration, live vs offline behavior, and hand invariance, and gave me a clearer idea of what would be meaningful to work on next.

I’d really appreciate any feedback on whether this direction aligns with what you had in mind, or if you think there’s a better way to frame or extend it.

GitHub → GitHub - ayu-yishu13/Gesture_recog: Its a prototype for gesture recognition and signs

If you want, I can attach a video too. I would like to hear your feedback.

Thanks!

Hi @suresh.krishna Sir!

I would also like to run some practical experiments of my own on this project, if you would allow me to. If the project comes up again in 2026, I also look forward to implementing that suggestion.

Can you guide me on how and where to begin? I have a working knowledge of computer vision as well, since I have built projects in that area!

Cheers!!

Thank you for the clarification.

Based on your example, I believe I can meaningfully contribute to projects within your organization that involve camera-based perception and ML-driven interpretation of visual input. I’ve already been working on a real-time road hazard detection system using computer vision and deep learning, which gives me hands-on experience with end-to-end pipelines — data preparation, model design, evaluation, and deployment.

I’m now translating this experience into a concrete, feasible GSoC-style proposal, aligned with your organization’s goals, with clear milestones, evaluation criteria, and a working prototype as proof-of-work.

My aim is not just to propose an idea, but to reliably execute and contribute code, documentation, and reproducible results over a full 12-week GSoC 2026 timeline. I’d really value the opportunity to share a draft proposal and early prototype for feedback to ensure strong alignment with your expectations.

Please do not use an LLM to write your messages. If you are not, then that is fine.