Hi everyone,

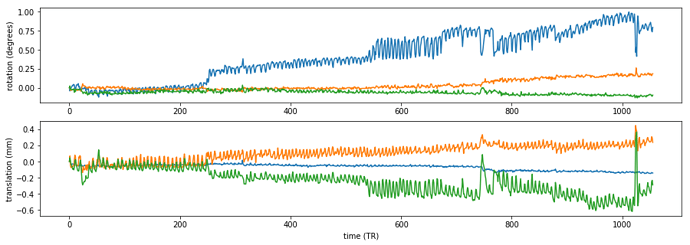

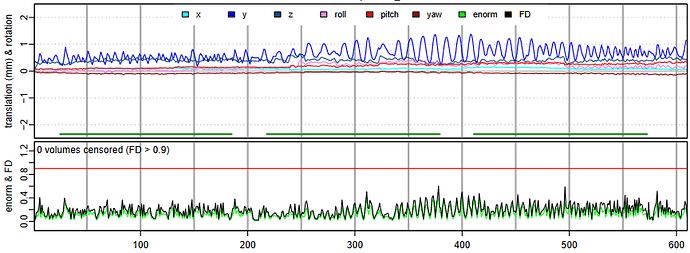

fMRI data acquired with a short TR of 500 ms (i.e. high temporal resolution) can pick up breathing oscillations, as you can see from the following estimated motion parameters.

The Data

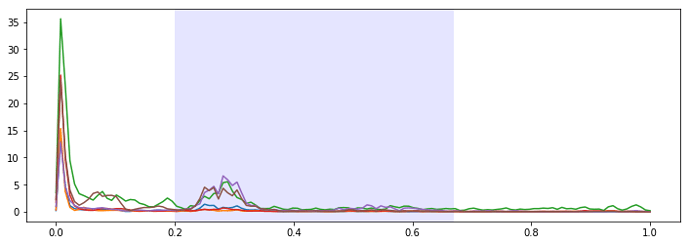

Now, this is clearly something that needs to be taken care of. Looking at the power spectrum of the motion parameters, we can see that the frequency band of those oscillations is between 0.2 and 0.67 Hz (see also this).

So the best approach would probably be to filter those frequencies out.
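For anyone who wants to reproduce this kind of check, here is a minimal sketch of estimating the power spectrum of a motion trace with Welch's method. The trace is synthetic (a slow drift plus a 0.3 Hz oscillation), and the function name `motion_power_spectrum` and all amplitudes are illustrative assumptions, not taken from the notebook.

```python
import numpy as np
from scipy.signal import welch


def motion_power_spectrum(params, t_r):
    """Welch power spectrum for each column of a (n_volumes, n_params) array."""
    fs = 1.0 / t_r  # sampling frequency in Hz
    nperseg = min(256, params.shape[0])
    # Linear detrending per segment keeps slow drift from swamping the spectrum.
    freqs, power = welch(params, fs=fs, nperseg=nperseg, axis=0, detrend="linear")
    return freqs, power


# Synthetic example: TR = 0.5 s, so the Nyquist frequency is 1 Hz.
t_r = 0.5
n_vols = 600
t = np.arange(n_vols) * t_r
rng = np.random.default_rng(0)
y_trans = (
    0.002 * t                             # slow drift (mm)
    + 0.2 * np.sin(2 * np.pi * 0.3 * t)   # breathing-like oscillation at 0.3 Hz
    + 0.01 * rng.standard_normal(n_vols)  # measurement noise
)
params = y_trans[:, None]

freqs, power = motion_power_spectrum(params, t_r)
peak_freq = freqs[np.argmax(power[:, 0])]
print(f"peak frequency: {peak_freq:.3f} Hz")
```

With real data, `params` would be the table of estimated motion parameters (one row per volume), and the peak should land inside the respiratory band.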

The Problem

Just applying temporal filters to the motion corrected NIfTI is problematic (see Lindquist et al. (2018)).

I think the best approach would be to either (1) temporally filter the motion parameters and apply them to the uncorrected image, or (2) temporally filter the uncorrected image, estimate motion parameters from the filtered image, and apply those motion parameters to the uncorrected image.

I’m not able to implement (1), but I think I found a workaround with (2).

The Questions

- How do others solve this issue?

- Is it possible to use the (manipulated/filtered) motion parameters to reslice/motion correct images?

The only feasible approach I was able to implement was to use FSL’s mcflirt, save the transformation matrices for each volume, and then apply them with applyxfm4d.

- Strategy (2) only works if high_pass is set to None (see here why it doesn’t work if high_pass=1./128). Otherwise, the initial filtering also removes slow drifts, so the estimated motion parameters no longer capture them and those slow drifts stay in the data after applyxfm4d, which defeats the whole purpose of motion correction.

- Does anybody know how this applyxfm4d step could be done with nibabel or nilearn?
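One possible direction for the last question, sketched with plain NumPy/SciPy rather than a dedicated nibabel or nilearn function: resample every volume with its own 4x4 matrix using `scipy.ndimage.affine_transform`. This is only a sketch under a strong assumption, namely that the matrices are already expressed in voxel coordinates of the image grid. The matrices saved by mcflirt follow FSL's own scaled-millimetre convention, so they would need converting first, and that conversion is not shown here. The function name `apply_voxel_affines` is hypothetical.

```python
import numpy as np
from scipy.ndimage import affine_transform


def apply_voxel_affines(data_4d, vox_affines, order=1):
    """Resample each volume of a 4D array with its own 4x4 voxel-space affine.

    ASSUMPTION: vox_affines[i] maps voxel coordinates of volume i onto
    voxel coordinates of the reference grid (not FSL's native convention).
    """
    out = np.empty(data_4d.shape, dtype=float)
    for i in range(data_4d.shape[-1]):
        # affine_transform expects the mapping from output (reference)
        # coordinates to input (moving) coordinates, hence the inverse.
        inv = np.linalg.inv(vox_affines[i])
        out[..., i] = affine_transform(
            data_4d[..., i], inv[:3, :3], offset=inv[:3, 3], order=order
        )
    return out


# Toy check: volume 1 is the reference shifted by +2 voxels along x.
ref = np.zeros((16, 16, 8))
ref[5:9, 6:10, 2:5] = 1.0
moving = np.stack([ref, np.roll(ref, 2, axis=0)], axis=-1)

shift_back = np.eye(4)
shift_back[0, 3] = -2.0  # moving voxel x corresponds to reference voxel x - 2
corrected = apply_voxel_affines(moving, [np.eye(4), shift_back])
print(np.allclose(corrected[..., 1], ref))
```

With real data the array could come from `nibabel.load(path).get_fdata()` and be written back with `nibabel.Nifti1Image(corrected, img.affine, img.header)`. Interpolation order and edge handling will differ from what applyxfm4d does, so the outputs should not be expected to match it exactly.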

For a much more detailed explanation of this post, and the code to create the figures, see this notebook.

cheers,

michael


Yes, this looks very much like breathing. You didn’t mention voxel size; I’d guess that in addition to the fast TR you have voxels less than 2 mm isotropic (and multiband/SMS?) - it seems that the small voxels often drive the apparent motion more than the fast TR. This post (http://mvpa.blogspot.com/2017/09/yet-more-with-respiration-and-motion.html) summarizes some of what I’ve explored with this; check the links in the first paragraph for more (particularly https://practicalfmri.blogspot.com/2016/10/motion-traces-for-respiratory.html).

For your questions, I don’t think there’s at all a consensus on what to do about apparent motion. I’m part of a large multi-session (mostly) task fMRI study (https://pages.wustl.edu/dualmechanisms), and what we’re doing so far is documenting and quantifying the apparent motion, but not changing our preprocessing. I haven’t checked the proportion lately, but something like a third to half of the participants show a prominent respiration-linked apparent motion. The breathing is linked to the task timing in one case (verbal-response Stroop), and appears entrained in other tasks, so I am hesitant to do too much filtering. We are currently gearing up to process all of our physio recordings through physIO to extract the max inhalations/exhalations and heart beats, to allow quantifying the entrainment, and hopefully give some hint as to how to proceed.

The considerations are a bit different for resting state, of course. We’re currently using a fairly comprehensive preprocessing based on the HCP pipelines and Siegel 2014 (DOI: 10.1002/hbm.22307), but again, the same for people with and without apparent motion.

Oh, I also have a suspicion (not proven; I’d be most curious if anyone has explored it) that respiration-linked apparent motion does not affect every voxel equally, but rather varies, perhaps in the superior/inferior direction. This suspicion (plus the task-entrainment) also makes me hesitant to get too aggressive with whole-brain filtering.


Thank you @jaetzel for the links. The blog posts are very informative! The voxel size is 2 x 2 x 3 mm. And yes, SMS with an acceleration factor of 3 (21 slices total).

Interesting, so you don’t see this pattern in all subjects? I’m curious whether this is due to the 800 ms TR. So far, I’ve only recorded 3 pilots, but I saw this oscillation in all of them.

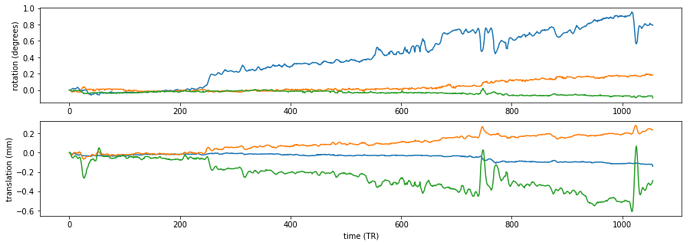

About the “best” approach, I’m not entirely comfortable doing motion correction with those “breathing confounded” parameters. That’s why I’m inclined to low-pass the motion parameters (see the following figure) and use them for the actual motion correction.

It feels better because they look much more like “normal” motion estimates. But you’re right, this all needs more exploration.
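A minimal sketch of what low-passing the motion parameters could look like, assuming a zero-phase Butterworth filter with a 0.2 Hz cutoff (the lower edge of the band mentioned earlier in the thread) and a TR of 0.5 s. The filter type, order, and the function name `lowpass_motion_params` are assumptions for illustration, not necessarily what produced the figure.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def lowpass_motion_params(params, t_r, cutoff_hz=0.2, order=4):
    """Zero-phase low-pass filter applied to each column of (n_volumes, n_params)."""
    fs = 1.0 / t_r
    sos = butter(order, cutoff_hz, btype="lowpass", fs=fs, output="sos")
    # Forward-backward filtering avoids shifting the trace in time.
    return sosfiltfilt(sos, params, axis=0)


# Synthetic trace: slow head movement plus a 0.3 Hz breathing-like oscillation.
t_r = 0.5
t = np.arange(600) * t_r
slow = 0.5 * np.sin(2 * np.pi * 0.01 * t)
raw = slow + 0.2 * np.sin(2 * np.pi * 0.3 * t)

filtered = lowpass_motion_params(raw[:, None], t_r)
residual = np.max(np.abs(filtered[50:-50, 0] - slow[50:-50]))
print(f"max deviation from the slow component: {residual:.4f} mm")
```

A cutoff this close to the oscillation frequency leaves a small residual, so the order and cutoff are worth tuning against the actual spectrum.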

I’ve explored here a bit more what the power spectrum looks like if you perform normal motion correction or motion correction on low-pass filtered data. But I’m not sure what the conclusion should be.

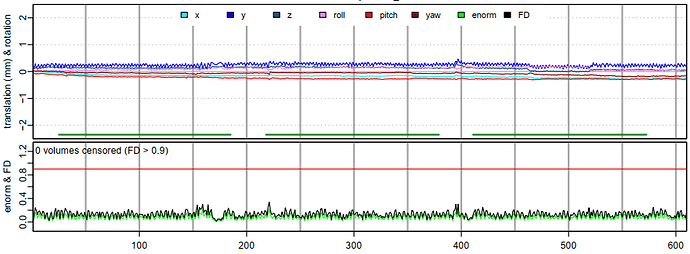

To be more precise, we see an oscillation in pretty much everyone, but the magnitude varies substantially. These are runs from two different people, both doing the same task (vertical grey lines are 1 minute intervals; 2.4 mm isotropic voxels, TR 1.2 s; CMRR MB 4).

We consider this first one quite good: very little overt head motion, small oscillations.

This second one also has very minimal overt head motion, but much larger oscillations; oscillations of ~1 mm, as in this person, are about as large as I’ve seen with our acquisition parameters.

Oh, and it can be quite useful to look at the actual volumes (e.g., as a movie with mango) before and after preprocessing; the apparent motion can be very obvious - the person may not have been moving, but the brain in the fMRI image certainly is, so using the “breathing confounded” parameters doesn’t strike me as too crazy.

The following manuscript describes this problem, and proposes filtering the motion numbers themselves for use in Friston regression and motion scrubbing. Correction of motion matrices for adjusting the auto-alignment of fMRI was not performed here.
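To make the downstream quantities concrete, here is a rough sketch of computing framewise displacement and a 24-column motion expansion from a table of motion parameters, which could be the filtered ones. The manuscript's own filter is not reproduced here. Assumptions: columns are three translations in mm followed by three rotations in radians (the order differs between tools), rotations are converted to arc length on a 50 mm sphere, and the expansion uses the parameters, their one-volume lag, and the squares of both. Function names are hypothetical.

```python
import numpy as np


def framewise_displacement(params, radius_mm=50.0):
    """Sum of absolute volume-to-volume changes; rotations scaled to mm of arc.

    ASSUMPTION: columns 0-2 are translations (mm), columns 3-5 rotations (rad).
    """
    diffs = np.abs(np.diff(params, axis=0))
    diffs[:, 3:] *= radius_mm
    # The first volume has no predecessor, so its displacement is set to 0.
    return np.concatenate([[0.0], diffs.sum(axis=1)])


def motion_expansion_24(params):
    """Parameters, their squares, the one-volume lag, and the squared lag."""
    lagged = np.vstack([np.zeros((1, params.shape[1])), params[:-1]])
    return np.hstack([params, params ** 2, lagged, lagged ** 2])


# Synthetic comparison: a smooth trace versus the same trace with a
# 0.3 Hz breathing-like oscillation added to the y translation.
t = np.arange(200) * 0.5
smooth = np.zeros((200, 6))
smooth[:, 1] = 0.001 * t
breathing = smooth.copy()
breathing[:, 1] += 0.2 * np.sin(2 * np.pi * 0.3 * t)

fd_smooth = framewise_displacement(smooth)
fd_breathing = framewise_displacement(breathing)
regressors = motion_expansion_24(smooth)
print(fd_smooth.mean(), fd_breathing.mean(), regressors.shape)
```

The oscillating trace yields a much larger mean framewise displacement even though the underlying slow movement is identical, which is why scrubbing thresholds behave so differently on unfiltered parameters.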