Hey everyone,

I hope y’all are doing fine.

I have been experimenting with nilearn.glm.cluster_level_inference and got some puzzling output that I can’t quite explain, so I thought I would reach out here and ask the hivemind.

I would like to use nilearn.glm.cluster_level_inference as an alternative to cluster-level thresholding/correction, and I was happy to see a nilearn implementation of Rosenblatt et al. (2018). My plan is to use this method to provide additional information for contrasts of interest, i.e., in addition to “classically” thresholded images.

I started by following this tutorial and everything seemed fine. However, I then realized that I had mistakenly used FPR-corrected images as input. After fixing this and using the unthresholded z-maps as input, the proportion_true_discoveries_img is empty and I also get a “UserWarning: Given img is empty.” warning.

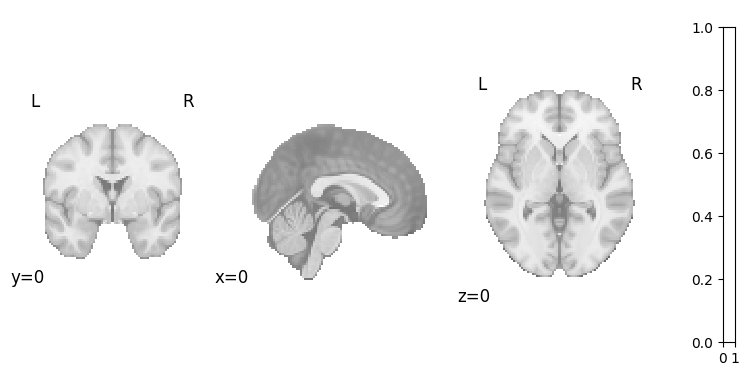

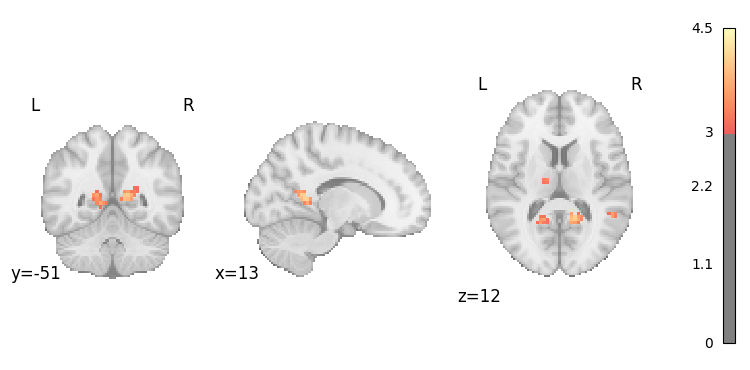

Here are the images for reference. First, the z-map and the corresponding proportion_true_discoveries_img:

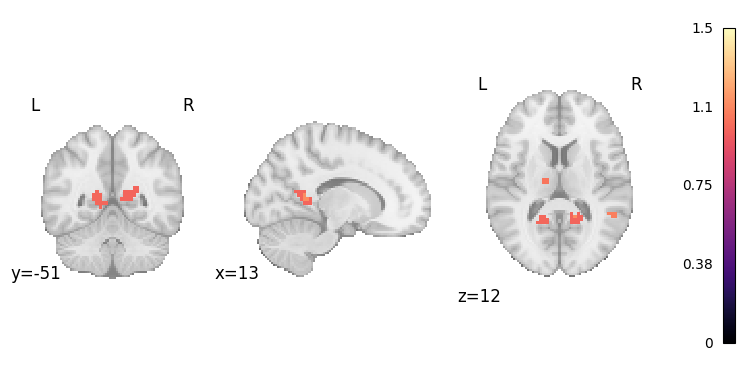

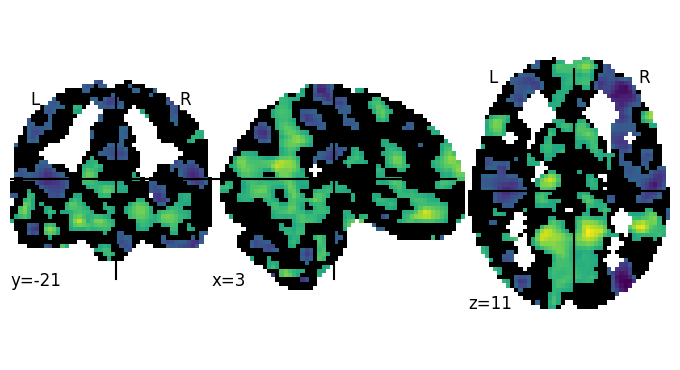

Second, the FPR-corrected z-map and the corresponding proportion_true_discoveries_img:

Does anyone have an idea what’s going on here? Sorry if my explanation is somewhat confusing; please let me know if you have questions or need more information.

Cheers, Peer

P.S.: I noticed that the code of nilearn.glm.threshold_stats_img lists an "all-resolution-inference" option, which does not seem to be used anywhere. Is this functionality that might be added in one of the upcoming releases?