I downloaded the OASIS-3 dataset in BIDS format using the GitHub script.

However, the BIDS validator reported many errors. Any tips on how to update the BIDS format?

I also tried using Clinica to convert PET and T1w from OASIS-3 to BIDS format. It worked fine. However, I need almost all the modalities, including the MRI func and anat data.

Hi @Yasmine, and welcome to neurostars!

Without seeing your errors specifically and an example subject’s data (e.g., with the tree command), it is hard to help, but there is this note in the documentation:

Notes on OASIS3 and OASIS4 BIDS formatting

The OASIS3 and OASIS4 BIDS files use version 1.0.1 of the BIDS specification, so if you plan to use a BIDS validator on downloaded OASIS data, make sure your validator software can validate to that version. This is an older version of the BIDS specification and there may be formatting that does not match current specifications or fields that were not incorporated into the BIDS specification until later in its development. The OASIS3 and OASIS4 BIDS files will not be updated to newer BIDS specifications.

One specific conflict that has been found with newer BIDS specifications is the labeling of the task name for OASIS BOLD scans. For any OASIS3 and OASIS4 BOLD data the task name should be “rest”. If you require OASIS JSON files to match a newer BIDS specification, you can modify your downloaded copy of any BOLD scan JSON files to meet the specification version you require.
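As a rough illustration of the JSON edit described in the note, here is a minimal Python sketch. It assumes the BOLD sidecars are named `*_bold.json` and that setting the `TaskName` field to `rest` is what your target BIDS version needs; the function name `set_rest_task_name` is made up for this example. It does not rename files, so a different `task-` label in the filenames would still need separate handling.

```python
import json
from pathlib import Path


def set_rest_task_name(bids_root):
    """Set "TaskName": "rest" in every *_bold.json sidecar under bids_root.

    Returns the list of sidecar paths that were modified.
    """
    changed = []
    for sidecar in sorted(Path(bids_root).rglob("*_bold.json")):
        metadata = json.loads(sidecar.read_text(encoding="utf-8"))
        if metadata.get("TaskName") != "rest":
            metadata["TaskName"] = "rest"
            # Rewrite the sidecar in place with readable indentation.
            sidecar.write_text(json.dumps(metadata, indent=2) + "\n", encoding="utf-8")
            changed.append(sidecar)
    return changed
```

It is probably safest to run this on a copy of the dataset first, e.g. `set_rest_task_name("path/to/BIDS_copy")`, and then rerun the validator.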

Best,

Steven

I am currently trying the files I got using Clinica.

I have PET and T1w in BIDS format.

I then used the following command to run the preprocessing:

fmriprep-docker C:\Users\yasmi\Desktop\Oasis\BIDS C:\Users\yasmi\Desktop\Oasis\BIDS\derivatives --fs-license-file C:/Users/yasmi/Desktop/Oasis/license.txt --skip-bids-validation --anat-only

However, I am getting lots of errors.

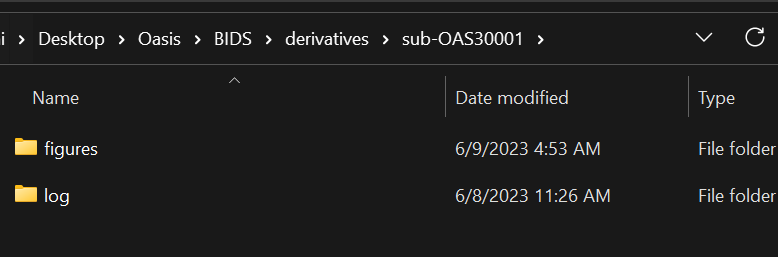

Here are the preprocessed folders and the errors:

Node: fmriprep_23_0_wf.single_subject_OAS30001_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

Working directory: /tmp/work/fmriprep_23_0_wf/single_subject_OAS30001_wf/anat_preproc_wf/anat_template_wf/anat_ref_dimensions

Node inputs:

max_scale = 3.0

t1w_list = <undefined>

Traceback (most recent call last):

File "/opt/conda/lib/python3.9/site-packages/nipype/pipeline/plugins/multiproc.py", line 67, in run_node

result["result"] = node.run(updatehash=updatehash)

File "/opt/conda/lib/python3.9/site-packages/nipype/pipeline/engine/nodes.py", line 527, in run

result = self._run_interface(execute=True)

File "/opt/conda/lib/python3.9/site-packages/nipype/pipeline/engine/nodes.py", line 645, in _run_interface

return self._run_command(execute)

File "/opt/conda/lib/python3.9/site-packages/nipype/pipeline/engine/nodes.py", line 771, in _run_command

raise NodeExecutionError(msg)

nipype.pipeline.engine.nodes.NodeExecutionError: Exception raised while executing Node anat_ref_dimensions.

Traceback:

Traceback (most recent call last):

File "/opt/conda/lib/python3.9/site-packages/nipype/interfaces/base/core.py", line 397, in run

runtime = self._run_interface(runtime)

File "/opt/conda/lib/python3.9/site-packages/niworkflows/interfaces/images.py", line 436, in _run_interface

orig_imgs = np.vectorize(nb.load)(in_names)

File "/opt/conda/lib/python3.9/site-packages/numpy/lib/function_base.py", line 2304, in __call__

return self._vectorize_call(func=func, args=vargs)

File "/opt/conda/lib/python3.9/site-packages/numpy/lib/function_base.py", line 2382, in _vectorize_call

ufunc, otypes = self._get_ufunc_and_otypes(func=func, args=args)

File "/opt/conda/lib/python3.9/site-packages/numpy/lib/function_base.py", line 2342, in _get_ufunc_and_otypes

outputs = func(*inputs)

File "/opt/conda/lib/python3.9/site-packages/nibabel/loadsave.py", line 115, in load

raise ImageFileError(msg)

nibabel.filebasedimages.ImageFileError: File /data/sub-OAS30001/ses-M006/anat/sub-OAS30001_ses-M006_T1w.nii.gz is not a gzip file

Can you guide me on this, please?

Also, is there a solution for the large dataset taking up all the disk space?

230609-11:30:30,440 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31085_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,445 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31086_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,449 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31087_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,454 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31088_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,459 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31089_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,463 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31090_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,468 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31091_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,472 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31092_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,477 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31093_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,482 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31094_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

230609-11:30:30,486 nipype.workflow ERROR:

could not run node: fmriprep_23_0_wf.single_subject_OAS31096_wf.anat_preproc_wf.anat_template_wf.anat_ref_dimensions

That is not enough information; it would help to see what files are in the folders. Please use the tree command or something similar.

Not easily, no. You could try to upload data to Brainlife.io and run analyses there.

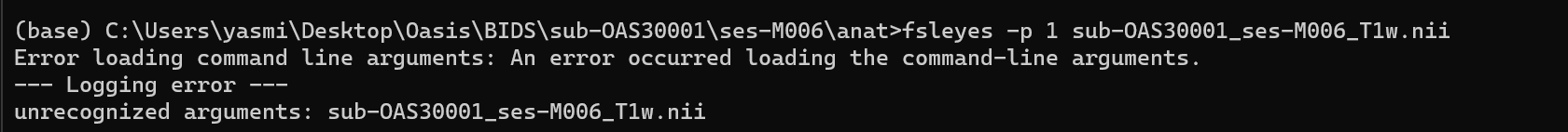

Are you able to load that file with MRI viewer software (such as fsleyes)? What is the file size?
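If it helps, here is a small Python sketch for checking this across the whole dataset rather than one file at a time. It relies on the fact that every gzip stream starts with the two bytes `0x1f 0x8b`, which is essentially what the "is not a gzip file" error is complaining about. The function name `find_suspect_niftis` and the `min_bytes` threshold are assumptions made for this example, not anything from fMRIPrep or OASIS; pick a threshold that makes sense for your images.

```python
from pathlib import Path

GZIP_MAGIC = b"\x1f\x8b"  # first two bytes of every gzip stream


def find_suspect_niftis(bids_root, min_bytes=100_000):
    """Return (path, size_in_bytes, reason) for each suspicious *.nii.gz file.

    min_bytes is an arbitrary, assumed cutoff for "too small to be an image".
    """
    suspects = []
    for path in sorted(Path(bids_root).rglob("*.nii.gz")):
        size = path.stat().st_size
        with path.open("rb") as handle:
            magic = handle.read(2)
        if magic != GZIP_MAGIC:
            suspects.append((path, size, "not a gzip file"))
        elif size < min_bytes:
            suspects.append((path, size, "suspiciously small"))
    return suspects
```

Printing the result, for example with `for path, size, reason in find_suspect_niftis("path/to/BIDS"): print(path, size, reason)`, should show whether the problem affects a few files or the entire download.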

Best,

Steven

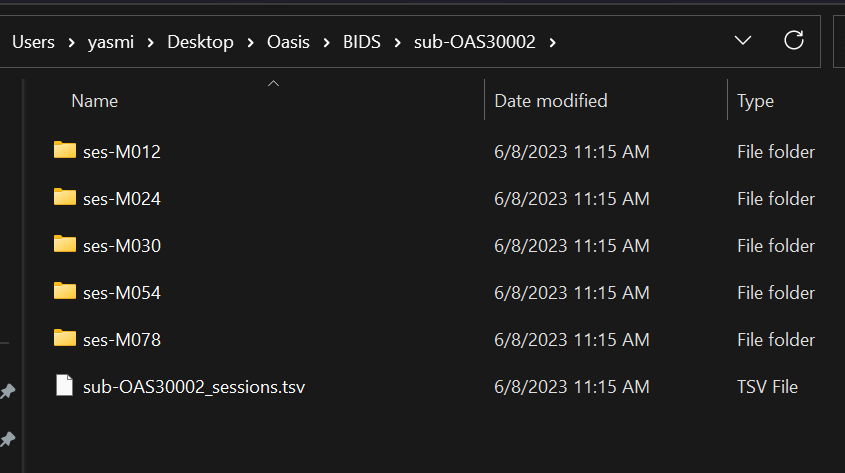

Yes. Excuse me. Here is the tree for a few subjects in the bids folder:

+---sub-OAS31165

| | sub-OAS31165_sessions.tsv

| |

| +---ses-M012

| | \---anat

| | sub-OAS31165_ses-M012_T1w.nii.gz

| |

| \---ses-M036

| \---anat

| sub-OAS31165_ses-M036_T1w.nii.gz

|

+---sub-OAS31166

| | sub-OAS31166_sessions.tsv

| |

| \---ses-M030

| \---anat

| sub-OAS31166_ses-M030_T1w.nii.gz

|

+---sub-OAS31167

| | sub-OAS31167_sessions.tsv

| |

| +---ses-M000

| | \---anat

| | sub-OAS31167_ses-M000_T1w.nii.gz

| |

| +---ses-M024

| | \---anat

| | sub-OAS31167_ses-M024_T1w.nii.gz

| |

| +---ses-M066

| | \---anat

| | sub-OAS31167_ses-M066_T1w.nii.gz

| |

| +---ses-M114

| | \---anat

| | sub-OAS31167_ses-M114_T1w.nii.gz

| |

| \---ses-M150

| \---anat

| sub-OAS31167_ses-M150_T1w.nii.gz

|

+---sub-OAS31168

| | sub-OAS31168_sessions.tsv

| |

| +---ses-M006

| | \---anat

| | sub-OAS31168_ses-M006_T1w.nii.gz

| |

| +---ses-M054

| | \---anat

| | sub-OAS31168_ses-M054_T1w.nii.gz

| |

| +---ses-M084

| | \---anat

| | sub-OAS31168_ses-M084_T1w.nii.gz

| |

| \---ses-M114

| \---anat

| sub-OAS31168_ses-M114_T1w.nii.gz

|

+---sub-OAS31169

| | sub-OAS31169_sessions.tsv

| |

| \---ses-M018

| \---anat

| sub-OAS31169_ses-M018_T1w.nii.gz

|

+---sub-OAS31170

| | sub-OAS31170_sessions.tsv

| |

| \---ses-M078

| \---anat

| sub-OAS31170_ses-M078_T1w.nii.gz

|

+---sub-OAS31171

| | sub-OAS31171_sessions.tsv

| |

| \---ses-M024

| \---anat

| sub-OAS31171_ses-M024_T1w.nii.gz

|

\---sub-OAS31172

| sub-OAS31172_sessions.tsv

|

+---ses-M012

| \---anat

| sub-OAS31172_ses-M012_T1w.nii.gz

|

\---ses-M054

\---anat

sub-OAS31172_ses-M054_T1w.nii.gz

Not all subjects have PET

| | sub-OAS30587_sessions.tsv

| |

| \---ses-M150

| +---anat

| | sub-OAS30587_ses-M150_T1w.nii.gz

| |

| \---pet

| sub-OAS30587_ses-M150_trc-18FAV45_pet.nii.gz

|

+---sub-OAS30588

| | sub-OAS30588_sessions.tsv

| |

| +---ses-M012

| | +---anat

| | | sub-OAS30588_ses-M012_T1w.nii.gz

| | |

| | \---pet

| | sub-OAS30588_ses-M012_trc-11CPIB_pet.nii.gz
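To get an overview of which sessions have which modalities without reading the whole tree, something like the following Python sketch could be used. It assumes the `sub-*/ses-*/<datatype>` layout shown in the tree above; the function names are made up for this example.

```python
from collections import Counter
from pathlib import Path


def summarize_datatypes(bids_root):
    """Map each (subject, session) to a tuple of the datatype folders it contains."""
    summary = {}
    for session_dir in sorted(Path(bids_root).glob("sub-*/ses-*")):
        if not session_dir.is_dir():
            continue
        datatypes = sorted(p.name for p in session_dir.iterdir() if p.is_dir())
        summary[(session_dir.parent.name, session_dir.name)] = tuple(datatypes)
    return summary


def count_combinations(summary):
    """Count how many sessions share each combination of datatypes."""
    return Counter(summary.values())
```

For example, `count_combinations(summarize_datatypes("path/to/BIDS"))` would give the number of sessions with only `anat` versus those with both `anat` and `pet`.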

Also, I am trying the following command, but nothing seems to start after I run it:

fsleyes -p 1 sub-OAS30001_ses-M006_T1w.nii

What is the file size of the T1 image that is causing problems, and what about running fslinfo on it?

Could you tell me how I can get the fslinfo output?

In the derivatives folder, it seems that all files have the same log errors.

fslinfo is a command in FSL. And that file size is way too small to be a T1 image. I have not worked with OASIS data so I cannot say why it did not seem to download completely.
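If FSL is not installed, a rough stand-in for the basic information is to read the NIfTI-1 header directly. The Python sketch below uses only the standard library and the fixed NIfTI-1 layout (348-byte header, `dim` at byte 40, `datatype` and `bitpix` at byte 70, `pixdim` at byte 76). It is not a replacement for fslinfo, and the function name `read_nifti1_header` is made up for this example. On a file that is not really gzip-compressed, `gzip.open` should raise an error when reading, which in itself confirms the problem.

```python
import gzip
import struct


def read_nifti1_header(path):
    """Return basic NIfTI-1 header fields from a .nii or .nii.gz file."""
    opener = gzip.open if str(path).endswith(".gz") else open
    with opener(path, "rb") as handle:
        header = handle.read(348)  # a NIfTI-1 header is 348 bytes long
    if len(header) < 348:
        raise ValueError(f"{path}: only {len(header)} header bytes, file looks truncated")

    # sizeof_hdr (first int32) must equal 348; use it to detect the byte order.
    for byte_order in ("<", ">"):
        if struct.unpack(byte_order + "i", header[0:4])[0] == 348:
            break
    else:
        raise ValueError(f"{path}: not a NIfTI-1 header")

    dim = struct.unpack(byte_order + "8h", header[40:56])
    datatype, bitpix = struct.unpack(byte_order + "2h", header[70:74])
    pixdim = struct.unpack(byte_order + "8f", header[76:108])
    ndim = dim[0]  # dim[0] holds the number of dimensions
    return {
        "shape": dim[1 : ndim + 1],
        "voxel_size": pixdim[1 : ndim + 1],
        "datatype": datatype,
        "bitpix": bitpix,
    }
```

Calling `read_nifti1_header("sub-OAS30001_ses-M006_T1w.nii.gz")` on a healthy T1w image should return a three-dimensional shape and voxel sizes of roughly a millimetre; an exception points to a corrupted or incomplete file.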

Thank you

Could you please recommend a dataset that would be easier to find resources for?

Maybe I can resume working on the OASIS 3 dataset when I am more experienced.

I need a multimodal dataset.