Hi all,

I’m trying to run a searchlight analysis but because of the size of the data I need to parallel process by splitting my nifti image into slices on axis=0. (Shape is 91,191,91; and I am going to loop over the first axis).

I believe this can be done but I want to make sure that the radius around each of the processed voxels remains the same.

I am using Nilearn, nilearn.decoding.SearchLight, if I simply use the slice of shape (191,91) as my *process_mask_img and my full mask as my **mask_img will that be enough?

Want to be sure before I run it on the hpc

The class nilearn.decoding.SearchLight has a parameter n_jobs which is by default set to 1 (specifying 1 CPU should be used) but it can be set to any positive integer, representing the number of CPUs to be used in the computation. Alternatively, you can set this parameter value to -1 which will then use all available CPUs to parallelise the computation. I wouldn’t suggest trying to do this in the way you suggested.

Check out scikit-learn’s page on Parallelism if you want to know more. There you can read more about the role of the n_jobs parameter:

The n_jobs parameters of estimators always controls the amount of parallelism managed by joblib (processes or threads depending on the joblib backend). The thread-level parallelism managed by OpenMP in scikit-learn’s own Cython code or by BLAS & LAPACK libraries used by NumPy and SciPy operations used in scikit-learn is always controlled by environment variables or threadpoolctl as explained below.

Let us know how you get on and if this solved your problem.

Is there a reason it would be bad to do it my way? I was able to make it work using my way. Are you saying results might be different if I use their built in n_jobs function?

I would imagine that is the case. The SearchLight class takes a parameter radius which defines the dimensions of the sphere used to collect the voxel information to be provided to a function which defines the computation to be done on that data (e.g. a linear classifier). If you split a volume up into 2D slices, you are only going to be able to extract information along the plane in a 2D manner.

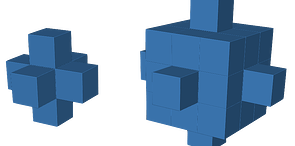

In the nilearn searchlight example on the Haxby dataset, for example, the radius parameter is set to 5.6 (mm) which defines a “sphere” like this:

The radius is larger than the typical slice thickness (2-3 mm) of a slide of fMRI.

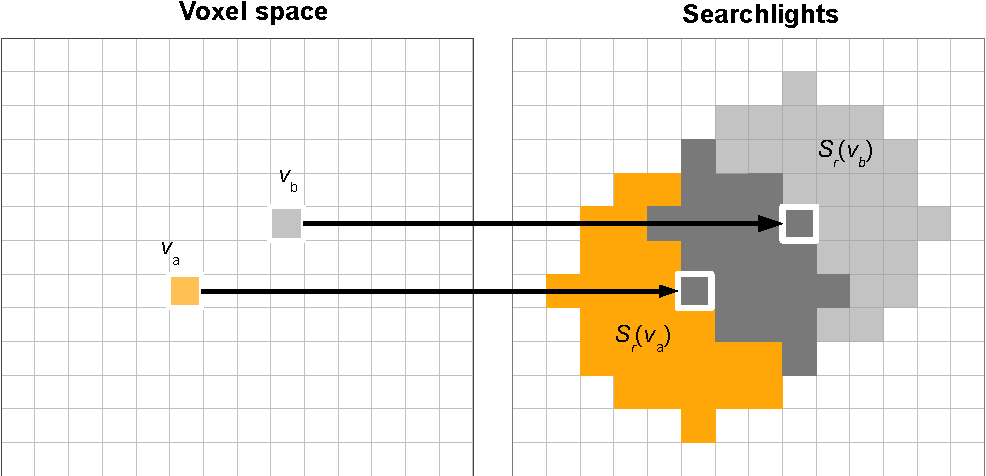

In your case, by looping over the first dimension, you’ll only be considering things in 2D, like this:

So you will still get working code and possibly good results, but it’s missing the idea of a spherical shape scanning across the brain and taking advantage of voxel information in adjacent slices. I’m not sure exactly how this process is parallelised by using the n_jobs parameter, but I’m pretty sure it will not reduce the computation to 2D within-slice voxel locations.

I don’t have time to check the source code right now, but I’m pretty comfortable in saying that what your code does would be different to the suggested way to parallelise this computation. My experience is to always try to rely on the battle-tested code provided by a library such as nilearn rather than potentially introducing unintended consequences in ad hoc user implementations.

If you had a radius of 6 mm and then wrote in a paper that you used a searchlight with that radius, a reader would assume it was like the first image I posted above (3D) but your computation would be doing something in 2D (like the 2nd image).

There’s nothing wrong, per se, in doing it your way, if you have a specific reason to justify it. Just make sure that you’re explicit in your reporting that it was done in that way. I think you’re potentially losing out on useful neighbouring voxel information by implementing the searchlight in the way you suggested.

I understand, results were not good so them potentially improving is good to hear.

I wanted to clarify though, the searchlight class has two inputs for masks; mask and process_mask. I was assuming that process_mask simply showed which voxels to check and then “mask” would control for the voxels that would have information. Meaning that even though I would only be processing a 2D slice, it would represent only processing all the voxels in that slice but each voxel sphere would work as normal.

If I understand your use case correctly, you can indeed perform searchlights within a slice by selecting that slice with the mask argument.

HTH,

Bertrand

1 Like