Summary of what happened:

I am running QSIPrep version 1.0.1 with SLURM on a few studies. For one of the studies, the pipeline fails with "Node merge_dwis failed to run on host". Could you help me identify what might be wrong, or which parameters I should set?

Command used (and if a helper script was used, a link to the helper script or the command generated):

Here is my code:

list=$1

sub=`awk NR==${SLURM_ARRAY_TASK_ID} ${list}`

singularity run --cleanenv --containall -e \
-B ${WORK_DIR}/:/work/ \
-B ${BIDS_DIR}:/data/ \
-B ${OUT_DIR}/:/out/ \
-B ${PIPELINE_DIR}:/indir/ \
/indir/qsiprep-1.0.1.sif \
/data/ /out/ participant \
--ignore fieldmaps t2w flair phase \
--fs-license-file /indir/license.txt \
--separate-all-dwis \
--participant-label ${sub} \
--distortion-group-merge none \
--anat-modality T1w \
--bids-filter-file /indir/Scripts/bids_filter.json \
--mem-mb 88000 \
--nthreads 4 \
--work-dir /work/ \
--hmc-model 3dSHORE \
--unringing-method rpg \
--output-resolution 2

Version:

qsiprep:1.0.1

Environment (Docker, Singularity / Apptainer, custom installation):

apptainer build ${PWD}/qsiprep-1.0.1.sif docker://pennlinc/qsiprep:1.0.1

Data formatted according to a validatable standard? Please provide the output of the validator:

Input data appears valid and follows BIDS structure.

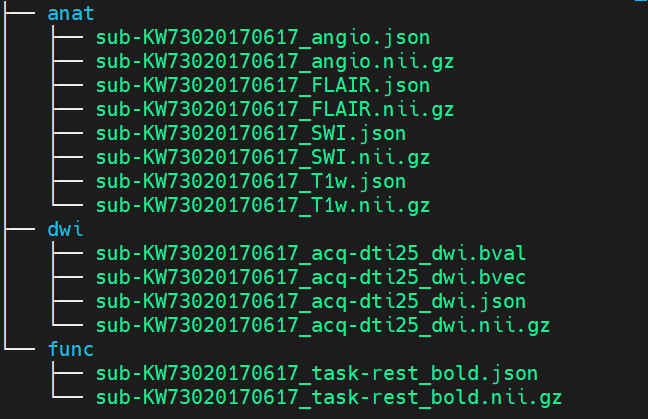

example input:

tree Data/Init_Downloaded/scans/All/batch_01/sub-OAS30001/

Data/Init_Downloaded/scans/All/batch_01/sub-OAS30001/
├── ses-d0757
│   ├── anat
│   │   ├── sub-OAS30001_ses-d0757_acq-TSE_T2w.json
│   │   ├── sub-OAS30001_ses-d0757_acq-TSE_T2w.nii.gz
│   │   ├── sub-OAS30001_ses-d0757_run-01_T1w.json
│   │   ├── sub-OAS30001_ses-d0757_run-01_T1w.nii.gz
│   │   ├── sub-OAS30001_ses-d0757_run-02_T1w.json
│   │   ├── sub-OAS30001_ses-d0757_run-02_T1w.nii.gz
│   │   ├── sub-OAS30001_ses-d0757_T2star.json
│   │   ├── sub-OAS30001_ses-d0757_T2star.nii.gz
│   │   ├── sub-OAS30001_ses-d0757_T2w.json
│   │   └── sub-OAS30001_ses-d0757_T2w.nii.gz
│   ├── dwi
│   │   ├── sub-OAS30001_ses-d0757_dwi.bval
│   │   ├── sub-OAS30001_ses-d0757_dwi.bvec
│   │   ├── sub-OAS30001_ses-d0757_dwi.json
│   │   └── sub-OAS30001_ses-d0757_dwi.nii.gz
│   └── func
│       ├── sub-OAS30001_ses-d0757_task-rest_run-01_bold.json
│       ├── sub-OAS30001_ses-d0757_task-rest_run-01_bold.nii.gz
│       ├── sub-OAS30001_ses-d0757_task-rest_run-02_bold.json
│       └── sub-OAS30001_ses-d0757_task-rest_run-02_bold.nii.gz
├── ses-d2430
│   ├── anat
│   │   ├── sub-OAS30001_ses-d2430_acq-TSE_T2w.json
│   │   ├── sub-OAS30001_ses-d2430_acq-TSE_T2w.nii.gz
│   │   ├── sub-OAS30001_ses-d2430_FLAIR.json
│   │   ├── sub-OAS30001_ses-d2430_FLAIR.nii.gz
│   │   ├── sub-OAS30001_ses-d2430_T1w.json
│   │   ├── sub-OAS30001_ses-d2430_T1w.nii.gz
│   │   ├── sub-OAS30001_ses-d2430_T2star.json
│   │   └── sub-OAS30001_ses-d2430_T2star.nii.gz
│   ├── dwi
│   │   ├── sub-OAS30001_ses-d2430_run-01_dwi.bval
│   │   ├── sub-OAS30001_ses-d2430_run-01_dwi.bvec
│   │   ├── sub-OAS30001_ses-d2430_run-01_dwi.json
│   │   ├── sub-OAS30001_ses-d2430_run-01_dwi.nii.gz
│   │   ├── sub-OAS30001_ses-d2430_run-02_dwi.bval
│   │   ├── sub-OAS30001_ses-d2q430_run-02_dwi.bvec
│   │   ├── sub-OAS30001_ses-d2430_run-02_dwi.json
│   │   └── sub-OAS30001_ses-d2430_run-02_dwi.nii.gz
│   ├── fmap
│   │   ├── sub-OAS30001_ses-d2430_run-01_magnitude1.json
│   │   ├── sub-OAS30001_ses-d2430_run-01_magnitude1.nii.gz
│   │   ├── sub-OAS30001_ses-d2430_run-01_magnitude2.json
│   │   ├── sub-OAS30001_ses-d2430_run-01_magnitude2.nii.gz
│   │   ├── sub-OAS30001_ses-d2430_run-01_phasediff.json
│   │   └── sub-OAS30001_ses-d2430_run-01_phasediff.nii.gz
│   └── func

Relevant log outputs (up to 20 lines):

[Node] Executing "merge_dwis" <qsiprep.interfaces.dwi_merge.MergeDWIs>

250807-10:55:00,39 nipype.workflow INFO:

[Node] Finished "merge_dwis", elapsed time 0.142085s.

250807-10:55:00,39 nipype.workflow WARNING:

Storing result file without outputs

250807-10:55:00,41 nipype.workflow WARNING:

[Node] Error on "qsiprep_1_0_wf.sub_OAS30001_ses_d0757_d2430_d3132_d3746_d4467_wf.dwi_preproc_ses_d3132_run_01_wf.pre_hmc_wf.merge_and_denoise_wf.merge_dwis" (/work/qsiprep_1_0_wf/sub_OAS30001_ses_d0757_d2430_d3132_d3746_d4467_wf/dwi_preproc_ses_d3132_run_01_wf/pre_hmc_wf/merge_and_denoise_wf/merge_dwis)

250807-10:55:01,718 nipype.workflow ERROR:

Node merge_dwis failed to run on host 2119fmn004.

250807-10:55:01,731 nipype.workflow ERROR:

Saving crash info to /out/sub-OAS30001/log/20250807-104944_2a0cf0e8-37e4-48f9-ad79-64929fc4907a/crash-20250807-105501-oasis-merge_dwis-ce4feded-e445-4542-991a-f21cccacf02d.txt

Traceback (most recent call last):
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/nipype/pipeline/plugins/multiproc.py", line 66, in run_node
    result["result"] = node.run(updatehash=updatehash)
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 525, in run
    result = self._run_interface(execute=True)
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 643, in _run_interface
    return self._run_command(execute)
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/nipype/pipeline/engine/nodes.py", line 769, in _run_command
    raise NodeExecutionError(msg)
nipype.pipeline.engine.nodes.NodeExecutionError: Exception raised while executing Node merge_dwis.

Traceback:

Traceback (most recent call last):
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/nipype/interfaces/base/core.py", line 401, in run
    runtime = self._run_interface(runtime)
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/qsiprep/interfaces/dwi_merge.py", line 76, in _run_interface
    to_concat, b0_means, corrections = harmonize_b0s(
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/qsiprep/interfaces/dwi_merge.py", line 716, in harmonize_b0s
    harmonized_niis.append(math_img(f'img*{correction:.32f}', img=nii_img))
  File "/opt/conda/envs/qsiprep/lib/python3.10/site-packages/nilearn/image/image.py", line 1058, in math_img
    result = eval(formula, data_dict)
  File "<string>", line 1, in <module>
NameError: ("Input formula couldn't be processed, you provided 'img*nan',", "name 'nan' is not defined")
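The last frame shows that the `correction` factor passed to `math_img` was NaN, which is why the formula became `img*nan`. One possible cause (an assumption, not something the log confirms) is that the failing run, `ses-d3132` `run-01`, contains no volumes that count as b=0, so its mean b0 signal is undefined. Another is NaN or all-zero voxels in the image itself. The sketch below only covers the first case: it scans the `.bval` files of a subject and lists runs without any b0 volume. The helper names and the threshold of 100 are illustrative assumptions; the threshold should match whatever b0 threshold QSIPrep was run with.

```python
from pathlib import Path

# Assumed b0 threshold for illustration; adjust to the value used in the run.
B0_THRESHOLD = 100.0


def read_bvals(path):
    """Read a FSL-style .bval file into a flat list of floats."""
    return [float(tok) for tok in Path(path).read_text().split()]


def count_b0s(bvals, threshold=B0_THRESHOLD):
    """Count volumes whose b-value is at or below the threshold."""
    return sum(1 for b in bvals if b <= threshold)


def find_runs_without_b0(subject_dir, threshold=B0_THRESHOLD):
    """Return the names of .bval files under ses-*/dwi/ that have no b0 volume."""
    missing = []
    for bval_file in sorted(Path(subject_dir).glob("ses-*/dwi/*_dwi.bval")):
        if count_b0s(read_bvals(bval_file), threshold) == 0:
            missing.append(bval_file.name)
    return missing


# Formatting a NaN the way the traceback does reproduces the failing formula.
formula = f"img*{float('nan'):.32f}"
print(formula)  # prints "img*nan"
```

Calling `find_runs_without_b0` on the subject directory (for example the `sub-OAS30001` folder shown above) returns an empty list if every run has at least one b0 volume. In that case the image data for the failing run would be the next thing to inspect.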