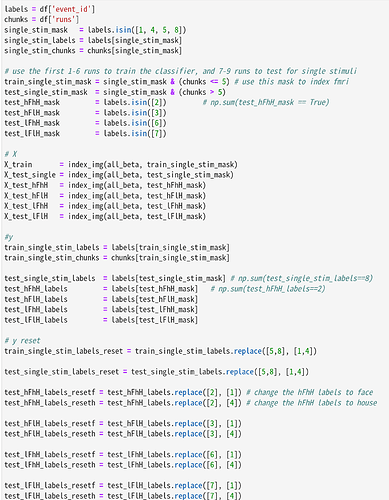

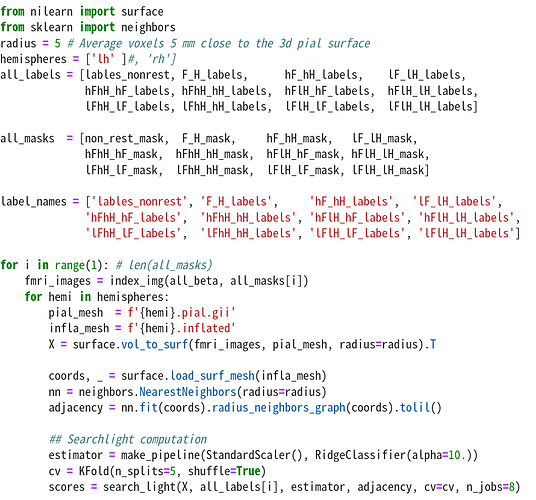

Hi experts, I’m trying to do surface-based searchlight decoding. According to the tutorial (Nilearn: Statistical Analysis for NeuroImaging in Python — Machine learning for NeuroImaging), I can only run searchlight decoding between two conditions. In my experiment, I have several conditions, and I want to split the fMRI data into one training dataset and several test datasets. Then I can train a classifier on the training dataset and test it on each of the test datasets. Here are my dataset labels:

How can I run such a searchlight analysis on the surface?

Any suggestions?

Thanks~

No, searchlight is not limited to two conditions. It can work for any categorical variable.

The restriction to two conditions in the example is simply for the sake of efficiency, given that searchlight is quite slow.
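To illustrate the point about categorical variables, here is a minimal sketch of a four-class decoding on a single patch of vertices, using scikit-learn directly rather than the Nilearn searchlight. The data are synthetic, and the patch size, noise level and choice of `LinearSVC` are assumptions made for the example only.

```python
import numpy as np
from sklearn.model_selection import cross_val_score
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)
n_per_class, n_features = 20, 30  # hypothetical trial and vertex counts

# Four conditions instead of two: the labels are just a categorical variable.
conditions = np.array(["hF", "hH", "lF", "lH"])
y = np.repeat(conditions, n_per_class)

# Synthetic data: each condition has its own mean pattern plus noise.
means = rng.normal(size=(len(conditions), n_features))
X = np.repeat(means, n_per_class, axis=0)
X = X + 0.5 * rng.normal(size=X.shape)

# Cross-validated accuracy over all four classes (chance level is 0.25).
scores = cross_val_score(LinearSVC(), X, y, cv=5)
mean_accuracy = scores.mean()
print(f"mean 4-class accuracy: {mean_accuracy:.2f}")
```

A searchlight repeats this kind of estimate around every vertex, which is why the cost grows quickly with the number of conditions and samples.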

Does that answer your concern ?

Best,

Bertrand

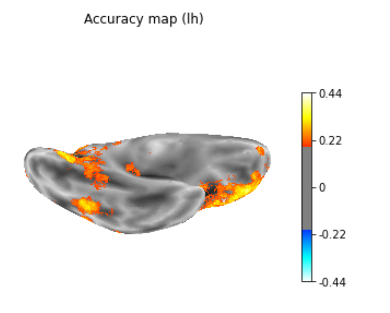

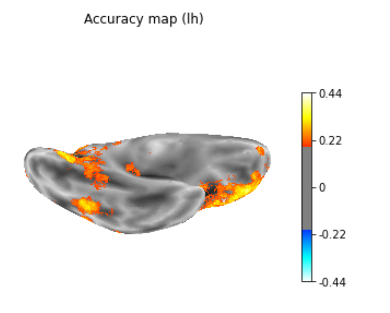

Hi Bertrand, thanks for your timely reply. Actually, my experiment has 8 conditions built from the face and house categories. They are high contrast face (hF), high contrast house (hH), low contrast face (lF), low contrast house (lH), high contrast face overlapping with high contrast house (hFhH), high contrast face overlapping with low contrast house (hFlH), low contrast face overlapping with high contrast house (lFhH), and low contrast face overlapping with low contrast house (lFlH). I have tried multi-category decoding, but it only tells me how well the categories can be told apart. What I want is to run searchlight decoding over the whole cortical surface and look at the hit rates for faces and houses under the different conditions. With a normal SVM, I can train the classifier on the hF, hH, lF and lH datasets and get the predicted labels for the overlapping test conditions. With the searchlight, I can only get the scores for the input data.

I am not sure I understand what you want to do: do you want to get class-specific accuracies in the searchlight decoding? I don’t think that this is possible with Nilearn, which only returns a global accuracy value.
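For a single patch of vertices, the scheme described above (train on hF, hH, lF, lH, then predict the overlapping conditions) can be sketched with scikit-learn outside the searchlight. This is only an illustration on synthetic data: the `simulate` helper, the mixing weights and the patch size are all hypothetical, and nothing here is part of the Nilearn searchlight.

```python
import numpy as np
from sklearn.svm import LinearSVC

rng = np.random.default_rng(0)
n_trials, n_vertices = 20, 30  # hypothetical sizes for one patch

# Hypothetical response patterns for the two categories.
face_pattern = rng.normal(size=n_vertices)
house_pattern = rng.normal(size=n_vertices)


def simulate(face_weight, house_weight):
    """Return n_trials noisy samples mixing the two patterns (toy model)."""
    signal = face_weight * face_pattern + house_weight * house_pattern
    return signal + 0.5 * rng.normal(size=(n_trials, n_vertices))


# Made-up weights: high contrast = 1.0, low contrast = 0.5.
train_conditions = {"hF": (1.0, 0.0), "lF": (0.5, 0.0),
                    "hH": (0.0, 1.0), "lH": (0.0, 0.5)}
test_conditions = {"hFhH": (1.0, 1.0), "hFlH": (1.0, 0.5),
                   "lFhH": (0.5, 1.0), "lFlH": (0.5, 0.5)}

# Train a face-vs-house classifier on the single-category conditions only.
X_train = np.vstack([simulate(*w) for w in train_conditions.values()])
y_train = np.repeat(
    ["face" if name.endswith("F") else "house" for name in train_conditions],
    n_trials,
)
clf = LinearSVC().fit(X_train, y_train)

# For each overlapping condition, report how often "face" is predicted.
face_rate = {}
for name, weights in test_conditions.items():
    predictions = clf.predict(simulate(*weights))
    face_rate[name] = float(np.mean(predictions == "face"))
print(face_rate)
```

Getting such per-condition rates at every vertex would mean looping this over the vertex neighbourhoods yourself, since the searchlight only reports one cross-validated score per location.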

Sorry if I did not understand your need…

Best,

Bertrand

Hi, thanks for your reply. I gave up on getting class-specific accuracies in the surface searchlight decoding with Nilearn. I have now run into another obstacle that prevents me from moving forward. After running the searchlight between two categories, I want to get the accuracy within a defined ROI on the surface (such as FFA or PPA). How can I get the accuracy of a specified region with Nilearn?

You probably have the ROI defined as a texture mapped on the mesh: 1 in the regions, 0 otherwise.

You can then simply mask the array of searchlight values by the ROI texture.

For instance, if accuracies is the array of searchlight accuracies, of size n_vertices, and ffa is the binary FFA-defining texture, also of size n_vertices, then accuracies[ffa] (with ffa as a boolean array) gets you the accuracies in the FFA. This is standard Numpy array indexing.
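A minimal sketch of that masking, with synthetic values standing in for the real searchlight output and ROI texture (the vertex count and the ROI location are made up):

```python
import numpy as np

rng = np.random.default_rng(0)
n_vertices = 1000  # hypothetical mesh size

# Stand-in for the per-vertex searchlight accuracies.
accuracies = rng.uniform(0.4, 0.9, size=n_vertices)

# Stand-in for the ROI texture: 1 inside the FFA, 0 elsewhere.
ffa = np.zeros(n_vertices, dtype=int)
ffa[100:150] = 1

# Cast to bool first: indexing with an integer 0/1 array would instead
# pick vertices number 0 and 1 repeatedly (integer "fancy" indexing).
ffa_mask = ffa.astype(bool)

ffa_accuracies = accuracies[ffa_mask]  # accuracies of the ROI vertices only
ffa_mean = ffa_accuracies.mean()       # one summary value for the ROI
print(ffa_accuracies.shape, round(ffa_mean, 3))
```

The texture and the accuracy array have to be defined on the same mesh, so that index i refers to the same vertex in both.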

HTH,

Bertrand