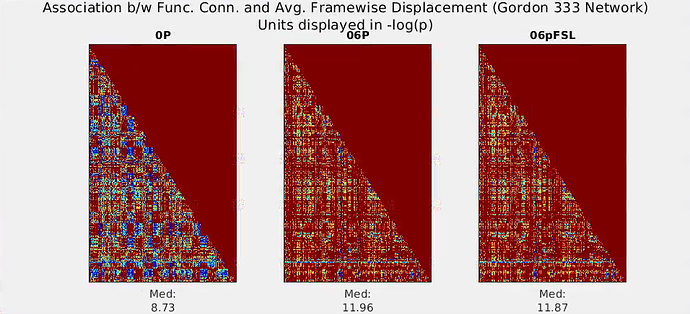

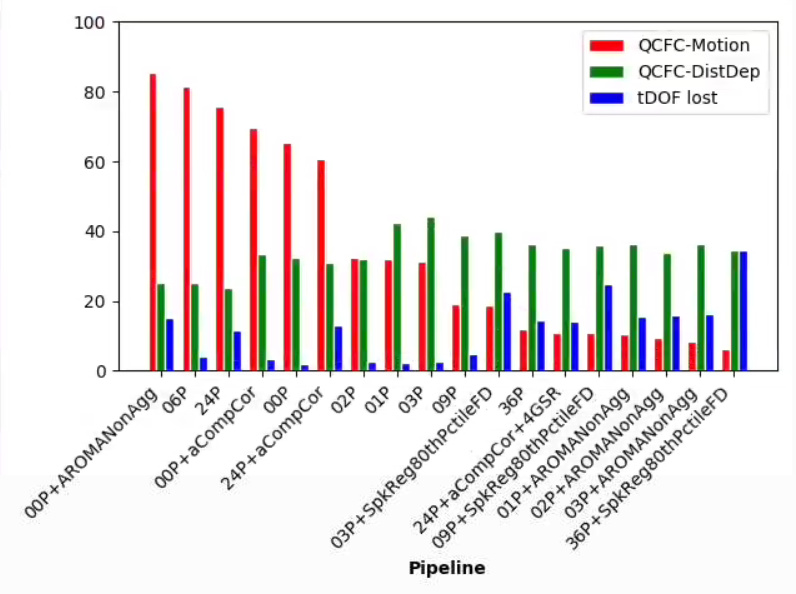

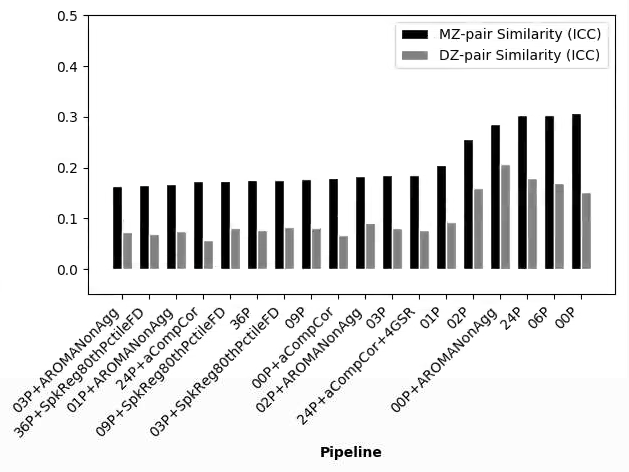

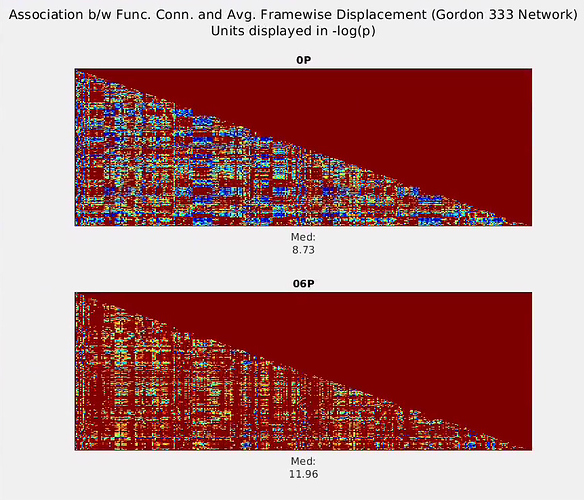

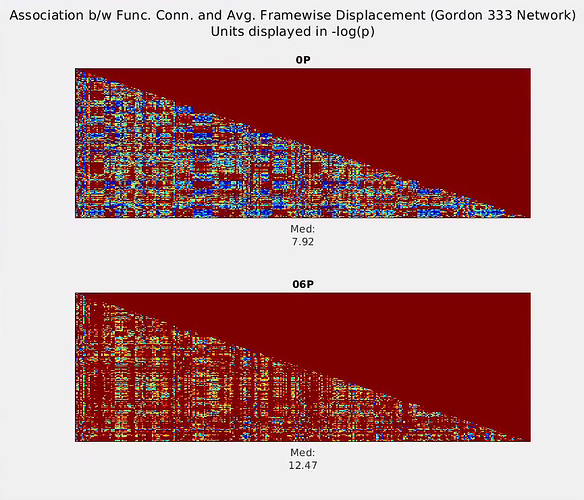

I still don't have a clear picture of what is going on. After trying several denoising approaches (re-computing the non-aggressive AROMA output, voxel-wise vs. ROI-wise denoising), I still observe patterns similar to those in my original post. For the models explained below, the QC-FC associations, i.e. the relationship between functional connectivity (temporal correlations among Gordon’s 333 ROIs) and motion (mean framewise displacement), are worst (largest) for the simple ICA-AROMA model, the 6 motion parameter model, etc. Even more peculiar, the QC-FC association for these models is greater than for the “00P” model, where only the highpass filter cosines and non-steady-state outliers were regressed out. (I’m developing Python scripts at https://github.com/sjburwell/fmriprep_denoising in case anyone is interested in testing on their own data.)

The denoising models below regress out the following:

00P: highpass filter cosine functions, non-steady-state outlier TRs

01P: 00P+global signal

02P: 00P+white matter, CSF

03P: 01P+02P

06P: 00P+motion parameters

09P: 03P+06P

24P: 06P+1st difference and quadratic expansion of the parameters

36P: 09P+1st difference and quadratic expansion of the parameters

03+, 09+, 36+SpkReg80thPctileFD: the 03P, 09P, and 36P models, plus spike regression of high-motion TRs

00P+, 24P+aCompCor: the 00P and 24P models, with the aCompCor columns included

00P+, 01P+, 02P+, 03P+AROMANonAgg: non-aggressive AROMA filtering, done using fsl_regfilt; for the 01P, 02P, and 03P models, global signal, white matter, and CSF were estimated after the initial AROMA filtering.
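For anyone who wants to see how the expanded models fit together, here is a minimal sketch of the 24P-style expansion, spike regressors, and the nuisance regression itself. This is not the code from my repository: the function names (`expand_parameters`, `spike_regressors`, `regress_out`) are made up for illustration, the data are random, and thresholding FD at each run's own 80th percentile is an assumption based on the model name.

```python
import numpy as np


def expand_parameters(params):
    """Return the parameters, their first differences, and the squares of both.

    params: (n_timepoints, n_params) array. Six motion parameters give 24
    columns; nine parameters (motion + global signal, WM, CSF) give 36.
    """
    params = np.asarray(params, dtype=float)
    # Backward difference; the first TR has no predecessor, so it is set to 0.
    diffs = np.vstack([np.zeros((1, params.shape[1])), np.diff(params, axis=0)])
    base = np.hstack([params, diffs])
    return np.hstack([base, base ** 2])


def spike_regressors(fd, percentile=80):
    """One-hot column per TR whose FD exceeds the given percentile.

    ASSUMPTION: the threshold is computed within the run; the real
    pipeline may define it differently.
    """
    fd = np.nan_to_num(np.asarray(fd, dtype=float))  # first FD value is undefined
    flagged = fd > np.percentile(fd, percentile)
    return np.eye(len(fd))[:, flagged]


def regress_out(data, confounds):
    """Residualise data (n_timepoints, n_rois) on confounds plus an intercept."""
    design = np.column_stack([np.ones(len(confounds)), confounds])
    beta, *_ = np.linalg.lstsq(design, data, rcond=None)
    return data - design @ beta


# Synthetic stand-ins for one run (hypothetical values, not real data).
rng = np.random.default_rng(0)
n_tr = 200
motion = rng.normal(scale=0.1, size=(n_tr, 6))
fd = np.abs(rng.normal(scale=0.2, size=n_tr))
roi_ts = rng.normal(size=(n_tr, 333))

confounds_24p = expand_parameters(motion)
spikes = spike_regressors(fd)
cleaned = regress_out(roi_ts, np.hstack([confounds_24p, spikes]))
print(confounds_24p.shape, spikes.shape, cleaned.shape)
```

Note that each spike column effectively zeroes out its TR in the residuals, which is why these models cost one temporal degree of freedom per flagged volume.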

In the figures above, the red bars show the percentage of edges in the 333-node network significantly related to motion (p < .001), the green bars show the absolute value of the distance-dependence of the functional connectivity (x100, to keep it on the same scale as the other bars), and the blue bars show the percentage of temporal degrees of freedom lost.
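To make the red and green bars concrete, here is a rough sketch of how such metrics could be computed across subjects. It is not my actual script: the names `qcfc` and `distance_dependence` are hypothetical, the data are simulated, the p-values use a Fisher z normal approximation rather than an exact t-test, and using a rank correlation with Euclidean inter-ROI distance for the distance-dependence is an assumption.

```python
import math
import numpy as np


def rank(x):
    """Ranks of x (ties are not averaged; fine for continuous data)."""
    return np.argsort(np.argsort(x)).astype(float)


def qcfc(fc_edges, mean_fd, alpha=0.001):
    """Per-edge correlation between FC and mean FD across subjects.

    fc_edges: (n_subjects, n_edges); mean_fd: (n_subjects,).
    Returns the Pearson r per edge and the percentage of edges with p < alpha.
    """
    n = len(mean_fd)
    fc_z = (fc_edges - fc_edges.mean(axis=0)) / fc_edges.std(axis=0)
    fd_z = (mean_fd - mean_fd.mean()) / mean_fd.std()
    r = fc_z.T @ fd_z / n
    # ASSUMPTION: two-sided p-value from the Fisher z normal approximation.
    z = np.arctanh(np.clip(r, -0.999999, 0.999999)) * math.sqrt(n - 3)
    p = np.array([math.erfc(abs(v) / math.sqrt(2)) for v in z])
    return r, 100.0 * np.mean(p < alpha)


def distance_dependence(r, distances):
    """Absolute rank correlation between QC-FC values and inter-ROI distance."""
    return abs(np.corrcoef(rank(r), rank(distances))[0, 1])


# Simulated example: 120 subjects, 20 ROIs (hypothetical, for illustration).
rng = np.random.default_rng(1)
n_subjects, n_rois = 120, 20
coords = rng.uniform(-60, 60, size=(n_rois, 3))
i, j = np.triu_indices(n_rois, k=1)
distances = np.linalg.norm(coords[i] - coords[j], axis=1)

mean_fd = rng.gamma(shape=2.0, scale=0.1, size=n_subjects)
# Let motion leak more strongly into short-distance edges.
leak = np.exp(-distances / 40.0)
fc_edges = rng.normal(scale=0.1, size=(n_subjects, len(i))) + np.outer(mean_fd, leak)

r, pct_sig = qcfc(fc_edges, mean_fd)
dd = distance_dependence(r, distances)
print(f"{pct_sig:.1f}% of edges related to motion; distance-dependence = {dd:.2f}")
```

With real data, `fc_edges` would hold the upper triangle of each subject's 333 x 333 correlation matrix after denoising, and `coords` the ROI centroids.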

In general, my results are consistent with the papers by Ciric et al. (2017) and Parkes et al. (2018), but they do not report 00P models, so I cannot say whether that aspect is consistent.
