Hello neurostars,

I’ve been struggling for a few days with a problem in the group statistics of my MVPA analysis; hopefully you can help me.

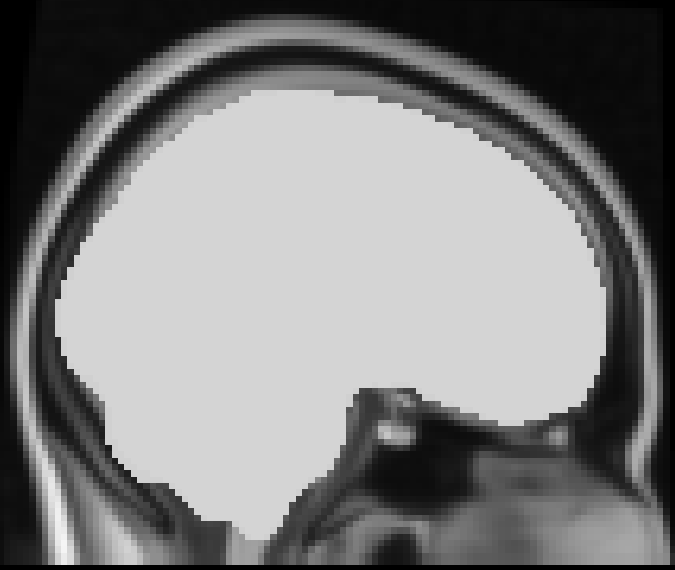

Problem: As soon as I run FSL’s randomise with TFCE, I get unrealistic p-values. They are unrealistic because every voxel is significant and holds exactly the same value: 0.9998999834060669 (see the screenshot, taken from FSLeyes using the tfce_corrp_tstat1 output; display thresholds: min=0.95, max=1.009899).
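
A small arithmetic check may help when reading that number. The sketch below assumes that the corrp images store 1 - p in single precision and that the smallest p-value obtainable from N permutations is 1/N; both are assumptions about randomise's output, not something shown in this post. The helper name `as_float32` is made up for illustration.

```python
import struct


def as_float32(value: float) -> float:
    """Round a Python float to single precision and return it as a float."""
    return struct.unpack("<f", struct.pack("<f", value))[0]


n_perm = 10000              # num_perm used in the randomise call in this post
smallest_p = 1.0 / n_perm   # assumption: smallest p-value reachable with n_perm permutations
ceiling = as_float32(1.0 - smallest_p)  # assumption: corrp stores 1 - p as float32

reported = 0.9998999834060669  # value read from tfce_corrp_tstat1 in FSLeyes

print(ceiling, ceiling == reported)
```

If the two numbers agree, the constant value would be the highest value this output can take with 10000 permutations rather than an arbitrary number, which under the assumptions above would mean every voxel sits at the ceiling of the permutation distribution.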

Input: I use 21 z-scored, smoothed accuracy maps (one per subject), which I merged into a 4D image with nilearn’s “concat_imgs” function in order to run a nonparametric one-sample t-test. I have inspected all of the maps and they look reasonable.

Permutation test: To rule out an error in Nipype, I ran the test both through Nipype and directly from the command line; both give similar results.

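    # run permutation test
    import os
    from nipype.interfaces import fsl

    # mem is presumably a nipype.caching.Memory instance created earlier (not shown)
    randomise = mem.cache(fsl.Randomise)
    randomise_results = randomise(
        in_file=os.path.join(os.getcwd(), "concat_dim-sparse-dense.nii.gz"),
        mask="/usr/share/fsl/data/standard/MNI152_T1_2mm_brain.nii.gz",
        one_sample_group_mean=True,  # one-sample t-test on the group mean
        tfce=True,                   # threshold-free cluster enhancement
        vox_p_values=True,           # also write voxelwise p-values
        num_perm=10000,
    )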
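
For comparison, a command-line run with the same options could be assembled roughly as in the sketch below. The helper `build_randomise_cmd` and the output basename `randomise_out` are hypothetical and not taken from the original run; the flags are the ones randomise documents for the input image (`-i`), output basename (`-o`), mask (`-m`), one-sample group mean (`-1`), TFCE (`-T`), voxelwise p-values (`-x`) and number of permutations (`-n`).

```python
from typing import List, Optional


def build_randomise_cmd(in_file: str, out_base: str,
                        mask: Optional[str] = None,
                        n_perm: int = 10000) -> List[str]:
    """Assemble a randomise call for a one-sample test with TFCE (hypothetical helper)."""
    cmd = ["randomise", "-i", in_file, "-o", out_base]
    if mask is not None:
        cmd += ["-m", mask]      # restrict the test to voxels inside the mask
    cmd += ["-1",                # one-sample group-mean test
            "-T",                # TFCE
            "-x",                # voxelwise p-value images
            "-n", str(n_perm)]   # number of permutations
    return cmd


cmd = build_randomise_cmd(
    "concat_dim-sparse-dense.nii.gz",
    "randomise_out",  # hypothetical output basename
    mask="/usr/share/fsl/data/standard/MNI152_T1_2mm_brain.nii.gz",
)
print(" ".join(cmd))
```

The list form can be passed to `subprocess.run(cmd, check=True)` on a machine where FSL is installed; printing it first makes it easy to compare against the command that Nipype reports for the same inputs.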

Attempted solutions: Over the past few days I have tried various combinations (with and without a mask, fewer or more permutations, with and without TFCE, …), but I always get similar results.

Thanks for your time,

Nico